diff --git a/.github/workflows/auto_deploy_docs.yaml b/.github/workflows/auto_deploy_docs.yaml

new file mode 100644

index 0000000..3e7372d

--- /dev/null

+++ b/.github/workflows/auto_deploy_docs.yaml

@@ -0,0 +1,27 @@

+name: Auto Deploy Docs

+run-name: Edit by ${{ github.actor }} triggered docs deployment

+on:

+ push:

+ branches:

+ - 'master'

+ paths:

+ - '**.md'

+jobs:

+ Dispatch-Deploy-Workflow:

+ runs-on: ubuntu-latest

+ steps:

+

+ - name: Print out debug info

+ run: echo "Repo ${{ github.repository }} | Branch ${{ github.ref }} | Runner ${{ runner.os }} | Event ${{ github.event_name }}"

+

+ - name: Dispatch deploy workflow

+ uses: actions/github-script@v6

+ with:

+ github-token: ${{ secrets.GHA_CROSSREPO_WORKFLOW_TOKEN }}

+ script: |

+ await github.rest.actions.createWorkflowDispatch({

+ owner: 'hello-robot',

+ repo: 'hello-robot.github.io',

+ workflow_id: 'auto_deploy.yaml',

+ ref: '0.3',

+ })

diff --git a/extra.css b/extra.css

index 3880e81..2734197 100644

--- a/extra.css

+++ b/extra.css

@@ -2,36 +2,87 @@

--md-primary-fg-color: #122837;

--md-primary-fg-color--light: hsla(0,0%, 100%, 0.7);

--md-primary-fg-color--dark: hsla(0, 0%, 0%, 0.07);

- --md-primary-bg-color: hsla(341, 85%, 89%, 1.0);

+ --md-primary-bg-color: #FDF1F5;

--md-typeset-a-color: #0550b3;

- --md-code-hl-number-color: hsla(196, 86%, 29%, 1);

+ --md-code-hl-number-color: hsla(196, 86%, 29%, 1); /* Make code block magic numbers less bright in light theme */

+

}

[data-md-color-primary=hello-robot-dark]{

--md-primary-fg-color: #122837;

--md-primary-fg-color--light: hsla(0,0%, 100%, 0.7);

--md-primary-fg-color--dark: hsla(0, 0%, 0%, 0.07);

- --md-primary-bg-color: hsla(341, 85%, 89%, 1.0);

- --md-typeset-a-color: hsla(341, 85%, 89%, 1.0);

- --md-code-hl-number-color: hsla(196, 86%, 29%, 1);

+ --md-primary-bg-color: #FDF1F5;

+ --md-typeset-a-color: hsla(341, 85%, 89%, 1.0); /* Make links more visible in dark theme */

+}

+

+[data-md-color-primary=hello-robot-light] .md-typeset h1,

+[data-md-color-primary=hello-robot-light] .md-typeset h2 {

+ color: hsla(237, 100%, 28%, 1);

}

+[data-md-color-primary=hello-robot-dark] .md-typeset h1,

+[data-md-color-primary=hello-robot-dark] .md-typeset h2 {

+ color: hsla(213, 100%, 68%, 1);

+}

[data-md-color-scheme="slate"] {

--md-hue: 210; /* [0, 360] */

}

+

+th, td {

+ border: 1px solid var(--md-typeset-table-color);

+ border-spacing: 0;

+ border-bottom: none;

+ border-left: none;

+ border-top: none;

+}

+

+.md-typeset__table {

+ line-height: 1;

+}

+

+.md-typeset__table table:not([class]) {

+ font-size: .74rem;

+ border-right: none;

+}

+

+.md-typeset__table table:not([class]) td,

+.md-typeset__table table:not([class]) th {

+ padding: 9px;

+}

+

+/* light mode alternating table bg colors */

+.md-typeset__table tr:nth-child(2n) {

+ background-color: #f8f8f8;

+}

+

+/* dark mode alternating table bg colors */

+[data-md-color-scheme="slate"] .md-typeset__table tr:nth-child(2n) {

+ background-color: hsla(var(--md-hue),25%,25%,1);

+}

+

+

/*

-Tables set to 100% width

-*/

+.md-header__topic:first-child {

+ font-weight: normal;

+}

+

+/* Indentation.

+div.doc-contents {

+ padding-left: 25px;

+ border-left: 4px solid rgba(230, 230, 230);

+ margin-bottom: 20px;

+}

.md-typeset__table {

- min-width: 100%;

+ min-width: 100%;

}

.md-typeset table:not([class]) {

- display: table;

+ display: table;

}

-

+*/

.shell-prompt code::before {

content: "$ ";

color: grey;

diff --git a/getting_started/quick_start_guide_re1.md b/getting_started/quick_start_guide_re1.md

index 94f51f7..426bf04 100644

--- a/getting_started/quick_start_guide_re1.md

+++ b/getting_started/quick_start_guide_re1.md

@@ -143,7 +143,7 @@ Once the robot has homed, let's write some quick test code:

```{.bash .shell-prompt}

ipython

```

-

+

Now let's move the robot around using the Robot API. Try typing in these interactive commands at the iPython prompt:

```{.python .no-copy}

@@ -177,7 +177,8 @@ robot.end_of_arm.move_to('stretch_gripper',-50)

robot.stow()

robot.stop()

```

-

+!!! note

+ The iPython interpreter also lets you execute blocks of code in one go instead of running commands line by line. To end the interpreter session, type `exit()` and press Enter.

## Change Credentials

Finally, we recommend that you change the login credentials for the default user, hello-robot.

diff --git a/getting_started/quick_start_guide_re2.md b/getting_started/quick_start_guide_re2.md

index eb3e5ae..1bca9bb 100644

--- a/getting_started/quick_start_guide_re2.md

+++ b/getting_started/quick_start_guide_re2.md

@@ -194,6 +194,8 @@ robot.end_of_arm.move_to('stretch_gripper',-50)

robot.stow()

robot.stop()

```

+!!! note

+ The iPython interpreter also lets you execute blocks of code in one go instead of running commands line by line. To end the interpreter session, type `exit()` and press Enter.

## Change Credentials

diff --git a/mkdocs.yml b/mkdocs.yml

index ecb3cf1..b7b75c5 100644

--- a/mkdocs.yml

+++ b/mkdocs.yml

@@ -101,7 +101,6 @@ nav:

- Basics:

- Introduction: ./stretch_body/tutorial_introduction.md

- Command line Tools: ./stretch_body/tutorial_command_line_tools.md

- - Stretch Body API: ./stretch_body/tutorial_stretch_body_api.md

- Robot Motion: ./stretch_body/tutorial_robot_motion.md

- Robot Sensors: ./stretch_body/tutorial_robot_sensors.md

- Advanced:

@@ -130,7 +129,7 @@ nav:

- FUNMAP: https://github.com/hello-robot/stretch_ros/tree/master/stretch_funmap

- Gazebo Basics: ./ros1/gazebo_basics.md

- Other Examples:

- - Teleoperate Stretch with a Node: ./ros1/example_1.md

+ - Mobile Base Velocity Control: ./ros1/example_1.md

- Filter Laser Scans: ./ros1/example_2.md

- Mobile Base Collision Avoidance: ./ros1/example_3.md

- Give Stretch a Balloon: ./ros1/example_4.md

diff --git a/ros1/README.md b/ros1/README.md

index f070023..487d027 100644

--- a/ros1/README.md

+++ b/ros1/README.md

@@ -17,12 +17,11 @@ This tutorial track is for users looking to get familiar with programming Stretc

| 4 | [Internal State of Stretch](internal_state_of_stretch.md) | Monitor the joint states of Stretch. |

| 5 | [RViz Basics](rviz_basics.md) | Visualize topics in Stretch. |

| 6 | [Navigation Stack](navigation_stack.md) | Motion planning and control for the mobile base using Nav stack. |

-| 7 | [MoveIt! Basics](moveit_basics.md) | Motion planning and control for the arm using MoveIt. |

-| 8 | [Follow Joint Trajectory Commands](follow_joint_trajectory.md) | Control joints using joint trajectory server. |

-| 9 | [Perception](perception.md) | Use the Realsense D435i camera to visualize the environment. |

-| 10 | [ArUco Marker Detection](aruco_marker_detection.md) | Localize objects using ArUco markers. |

-| 11 | [ReSpeaker Microphone Array](respeaker_microphone_array.md) | Learn to use the ReSpeaker Microphone Array. |

-| 12 | [FUNMAP](https://github.com/hello-robot/stretch_ros/tree/master/stretch_funmap) | Fast Unified Navigation, Manipulation and Planning. |

+| 7 | [Follow Joint Trajectory Commands](follow_joint_trajectory.md) | Control joints using joint trajectory server. |

+| 8 | [Perception](perception.md) | Use the Realsense D435i camera to visualize the environment. |

+| 9 | [ArUco Marker Detection](aruco_marker_detection.md) | Localize objects using ArUco markers. |

+| 10 | [ReSpeaker Microphone Array](respeaker_microphone_array.md) | Learn to use the ReSpeaker Microphone Array. |

+| 11 | [FUNMAP](https://github.com/hello-robot/stretch_ros/tree/master/stretch_funmap) | Fast Unified Navigation, Manipulation and Planning. |

## Other Examples

diff --git a/ros1/autodocking_nav_stack.md b/ros1/autodocking_nav_stack.md

index e5d75d8..bd4fd23 100644

--- a/ros1/autodocking_nav_stack.md

+++ b/ros1/autodocking_nav_stack.md

@@ -71,7 +71,7 @@ The third child of the root node is the `Move to dock` action node. This is a si

The fourth and final child of the sequence node is another `fallback` node with two children - the `Charging?` condition node and the `Move to predock` action node with an `inverter` decorator node (+/- sign). The `Charging?` condition node is a subscriber that checks if the 'present' attribute of the `BatteryState` message is True. If the robot has backed up correctly into the docking station and the charger port latched, this node should return SUCCESS and the autodocking would succeed. If not, the robot moves back to the predock pose through the `Move to predock` action node and tries again.

## Code Breakdown

-Let's jump into the code to see how things work under the hood. Follow along [here]() (TODO after merge) to have a look at the entire script.

+Let's jump into the code to see how things work under the hood. Follow along [here](https://github.com/hello-robot/stretch_ros/blob/noetic/stretch_demos/nodes/autodocking_bt.py) to have a look at the entire script.

We start off by importing the dependencies. The ones of interest are those relating to py-trees and the various behaviour classes in autodocking.autodocking_behaviours, namely, MoveBaseActionClient, CheckTF and VisualServoing. We also created custom ROS action messages for the ArucoHeadScan action defined in the action directory of stretch_demos package.

```python

diff --git a/ros1/example_4.md b/ros1/example_4.md

index 534bd17..0a65e08 100644

--- a/ros1/example_4.md

+++ b/ros1/example_4.md

@@ -172,7 +172,7 @@ The next line, `rospy.init_node(NAME, ...)`, is very important as it tells rospy

Instantiate class with `Balloon()`.

-Give control to ROS with `rospy.spin()`. This will allow the callback to be called whenever new messages come in. If we don't put this line in, then the node will not work, and ROS will not process any messages.

+`rospy.Rate()` sets the rate at which the node publishes information (10 Hz).

```python

while not rospy.is_shutdown():

diff --git a/ros1/example_8.md b/ros1/example_8.md

index 518f8de..e9bb6d3 100644

--- a/ros1/example_8.md

+++ b/ros1/example_8.md

@@ -9,7 +9,7 @@ This example will showcase how to save the interpreted speech from Stretch's [Re

Begin by running the `respeaker.launch` file in a terminal.

```{.bash .shell-prompt}

-roslaunch respeaker_ros sample_respeaker.launch

+roslaunch respeaker_ros respeaker.launch

```

Then run the [speech_text.py](https://github.com/hello-robot/stretch_tutorials/blob/noetic/src/speech_text.py) node. In a new terminal, execute:

diff --git a/ros1/follow_joint_trajectory.md b/ros1/follow_joint_trajectory.md

index 3a5f6b9..0e32373 100644

--- a/ros1/follow_joint_trajectory.md

+++ b/ros1/follow_joint_trajectory.md

@@ -293,12 +293,12 @@ You can also actuate a single joint for the Stretch. Below is the list of joints

```{.bash .no-copy}

############################# JOINT LIMITS #############################

-joint_lift: lower_limit = 0.15, upper_limit = 1.10 # in meters

+joint_lift: lower_limit = 0.00, upper_limit = 1.10 # in meters

wrist_extension: lower_limit = 0.00, upper_limit = 0.50 # in meters

joint_wrist_yaw: lower_limit = -1.75, upper_limit = 4.00 # in radians

-joint_head_pan: lower_limit = -2.80, upper_limit = 2.90 # in radians

-joint_head_tilt: lower_limit = -1.60, upper_limit = 0.40 # in radians

-joint_gripper_finger_left: lower_limit = -0.35, upper_limit = 0.165 # in radians

+joint_head_pan: lower_limit = -3.90, upper_limit = 1.50 # in radians

+joint_head_tilt: lower_limit = -1.53, upper_limit = 0.79 # in radians

+joint_gripper_finger_left: lower_limit = -0.6, upper_limit = 0.6 # in radians

# INCLUDED JOINTS IN POSITION MODE

translate_mobile_base: No lower or upper limit. Defined by a step size in meters

@@ -410,4 +410,4 @@ trajectory_goal.trajectory.header.stamp = rospy.Time(0.0)

trajectory_goal.trajectory.header.frame_id = 'base_link'

```

-Set `trajectory_goal` as a `FollowJointTrajectoryGoal` and define the joint names as a list. Then `trajectory_goal.trajectory.points` set by your list of points. Specify the coordinate frame that we want (*base_link*) and set the time to be now.

\ No newline at end of file

+Set `trajectory_goal` as a `FollowJointTrajectoryGoal` and define the joint names as a list. Then set `trajectory_goal.trajectory.points` to your list of points. Specify the coordinate frame that we want (*base_link*) and set the time to be now.
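
The joint limits listed earlier in this file can be checked before a goal is sent. The following is a minimal sketch and not part of the tutorial code: the `JOINT_LIMITS` table copies the updated values from the listing, while the `clamp_to_limits` helper and its name are hypothetical.

```python
# Limits copied from the JOINT LIMITS listing
# (meters for the lift and arm extension, radians for the other joints).
JOINT_LIMITS = {
    'joint_lift': (0.00, 1.10),
    'wrist_extension': (0.00, 0.50),
    'joint_wrist_yaw': (-1.75, 4.00),
    'joint_head_pan': (-3.90, 1.50),
    'joint_head_tilt': (-1.53, 0.79),
    'joint_gripper_finger_left': (-0.6, 0.6),
}


def clamp_to_limits(joint_name, position):
    """Hypothetical helper: return `position` clamped to the joint's limits."""
    lower, upper = JOINT_LIMITS[joint_name]
    return max(lower, min(upper, position))


# A lift target above the upper limit is pulled back to the limit.
print(clamp_to_limits('joint_lift', 1.5))
# A head tilt target inside the range is returned unchanged.
print(clamp_to_limits('joint_head_tilt', 0.2))
```

Clamping is only one possible policy; rejecting an out-of-range goal with an error may be preferable when a silent correction would hide a planning mistake.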

diff --git a/ros2/README.md b/ros2/README.md

index 408214d..f0b475d 100644

--- a/ros2/README.md

+++ b/ros2/README.md

@@ -29,8 +29,6 @@ This tutorial track is for users looking to get familiar with programming Stretc

diff --git a/ros2/align_to_aruco.md b/ros2/align_to_aruco.md

index 65e60ab..ddc235c 100644

--- a/ros2/align_to_aruco.md

+++ b/ros2/align_to_aruco.md

@@ -84,7 +84,7 @@ The joint_states_callback is the callback method that receives the most recent j

self.joint_state = joint_state

```

-The copute_difference() method is where we call the get_transform() method from the FrameListener class to compute the difference between the base_link and base_right frame with an offset of 0.5 m in the negative y-axis.

+The compute_difference() method is where we call the get_transform() method from the FrameListener class to compute the difference between the base_link and base_right frame with an offset of 0.5 m in the negative y-axis.

```python

def compute_difference(self):

self.trans_base, self.trans_camera = self.node.get_transforms()

@@ -103,7 +103,7 @@ To compute the (x, y) coordinates of the SE2 pose goal, we compute the transform

base_position_y = P_base[1, 0]

```

-From this, it is relatively straightforward to compute the angle phi and the euclidean distance dist. We then compute the angle z_rot_base to perform the last angle correction.

+From this, it is relatively straightforward to compute the angle **phi** and the euclidean distance **dist**. We then compute the angle z_rot_base to perform the last angle correction.

```python

phi = atan2(base_position_y, base_position_x)

diff --git a/ros2/deep_perception.md b/ros2/deep_perception.md

index 77a9848..019ab06 100644

--- a/ros2/deep_perception.md

+++ b/ros2/deep_perception.md

@@ -134,7 +134,7 @@ ros2 launch stretch_deep_perception stretch_detect_faces.launch.py

## Code Breakdown

-Ain't that something! If you followed the breakdown in object detection, you'll find that the only change if you are looking to detect faces, facial landmarks or estimat head pose instead of detecting objects is in using a different deep learning model that does just that. For this, we will explore how to use the OpenVINO toolkit. Let's head to the detect_faces.py [node](https://github.com/hello-robot/stretch_ros2/blob/iron/stretch_deep_perception/stretch_deep_perception/detect_faces.py) to begin.

+Ain't that something! If you followed the breakdown in object detection, you'll find that the only change if you are looking to detect faces, facial landmarks or estimate head pose instead of detecting objects is in using a different deep learning model that does just that. For this, we will explore how to use the OpenVINO toolkit. Let's head to the detect_faces.py [node](https://github.com/hello-robot/stretch_ros2/blob/iron/stretch_deep_perception/stretch_deep_perception/detect_faces.py) to begin.

In the main() method, we see a similar structure as with the object detction node. We first create an instance of the detector using the HeadPoseEstimator class from the [head_estimator.py](https://github.com/hello-robot/stretch_ros2/blob/iron/stretch_deep_perception/stretch_deep_perception/head_estimator.py) script to configure the deep learning models. Next, we pass this to an instance of the DetectionNode class from the detection_node.py script and call the main function.

```python

diff --git a/ros2/example_10.md b/ros2/example_10.md

index b556cf8..0a687ce 100644

--- a/ros2/example_10.md

+++ b/ros2/example_10.md

@@ -1,4 +1,4 @@

-# Example 10

+## Example 10

!!! note

ROS 2 tutorials are still under active development.

@@ -21,13 +21,13 @@ ros2 run rviz2 rviz2

Then run the tf2 broadcaster node to visualize three static frames.

```{.bash .shell-prompt}

-ros2 run stretch_ros_tutorials tf2_broadcaster

+ros2 run stretch_ros_tutorials tf_broadcaster

```

The GIF below visualizes what happens when running the previous node.

-  +

+

**OPTIONAL**: If you would like to see how the static frames update while the robot is in motion, run the stow command node and observe the tf frames in RViz.

@@ -35,9 +35,8 @@ The GIF below visualizes what happens when running the previous node.

```{.bash .shell-prompt}

ros2 run stretch_ros_tutorials stow_command

```

-

-  +

+

### The Code

@@ -222,13 +221,13 @@ ros2 launch stretch_core stretch_driver.launch.py

Then run the tf2 broadcaster node to create the three static frames.

```{.bash .shell-prompt}

-ros2 run stretch_ros_tutorials tf2_broadcaster

+ros2 run stretch_ros_tutorials tf_broadcaster

```

Finally, run the tf2 listener node to print the transform between two links.

```{.bash .shell-prompt}

-ros2 run stretch_ros_tutorials tf2_listener

+ros2 run stretch_ros_tutorials tf_listener

```

Within the terminal the transform will be printed every 1 second. Below is an example of what will be printed in the terminal. There is also an image for reference of the two frames.

@@ -247,7 +246,7 @@ rotation:

```

-  +

+

### The Code

diff --git a/ros2/example_2.md b/ros2/example_2.md

index a9001e7..675c83c 100644

--- a/ros2/example_2.md

+++ b/ros2/example_2.md

@@ -59,9 +59,11 @@ ros2 run stretch_ros_tutorials scan_filter

Then run the following command to bring up a simple RViz configuration of the Stretch robot.

```{.bash .shell-prompt}

-ros2 run rviz2 rviz2 -d `ros2 pkg prefix stretch_calibration`/rviz/stretch_simple_test.rviz

+ros2 run rviz2 rviz2 -d `ros2 pkg prefix stretch_calibration`/share/stretch_calibration/rviz/stretch_simple_test.rviz

```

-

+!!! note

+ If the laser scan points published by the scan or the scan_filtered topic are not visible in RViz, you can visualize them by adding them using the 'Add' button in the left panel, selecting the 'By topic' tab, and then selecting the scan or scan_filtered topic.

+

Change the topic name from the LaserScan display from */scan* to */filter_scan*.

diff --git a/ros2/example_4.md b/ros2/example_4.md

index c2143da..9dd0c78 100644

--- a/ros2/example_4.md

+++ b/ros2/example_4.md

@@ -9,7 +9,7 @@ Let's bring up Stretch in RViz by using the following command.

```{.bash .shell-prompt}

ros2 launch stretch_core stretch_driver.launch.py

-ros2 run rviz2 rviz2 -d `ros2 pkg prefix stretch_calibrtion`/rviz/stretch_simple_test.rviz

+ros2 run rviz2 rviz2 -d `ros2 pkg prefix stretch_calibration`/share/stretch_calibration/rviz/stretch_simple_test.rviz

```

In a new terminal run the following commands to create a marker.

diff --git a/ros2/follow_joint_trajectory.md b/ros2/follow_joint_trajectory.md

index 145b040..4ff63d9 100644

--- a/ros2/follow_joint_trajectory.md

+++ b/ros2/follow_joint_trajectory.md

@@ -1,6 +1,6 @@

## FollowJointTrajectory Commands

!!! note

- ROS 2 tutorials are still under active development.

+ ROS 2 tutorials are still under active development. This exercise requires Ubuntu 22.04 and ROS 2 Iron to work completely.

Stretch driver offers a [`FollowJointTrajectory`](http://docs.ros.org/en/api/control_msgs/html/action/FollowJointTrajectory.html) action service for its arm. Within this tutorial, we will have a simple FollowJointTrajectory command sent to a Stretch robot to execute.

diff --git a/ros2/getting_started.md b/ros2/getting_started.md

index 3d0af01..4a58b9c 100644

--- a/ros2/getting_started.md

+++ b/ros2/getting_started.md

@@ -22,13 +22,13 @@ We will disable ROS1 by commenting out the ROS1 related lines by adding '#' in f

Save this configuration using **Ctrl + S**. Close out of the current terminal and open a new one. ROS2 is now enabled!

## Refreshing the ROS2 workspace

-While Stretch ROS2 is in beta, there will be frequent updates to the ROS2 software. Therefore, it makes sense to refresh the ROS2 software to the latest available release. In the ROS and ROS2 world, software is organized into "ROS Workspaces", where packages can be developed, compiled, and be made available to run from the command line. We are going to refresh the ROS2 workspace, which is called "~/ament_ws" and available in the home directory. Follow the [Create a new ROS Workspace guide](https://docs.hello-robot.com/0.2/stretch-install/docs/ros_workspace/) to run the `stretch_create_ament_workspace.sh` script. This will delete the existing "~/ament_ws", create a new one with all of the required ROS2 packages for Stretch, and compile it.

+While Stretch ROS2 is in beta, there will be frequent updates to the ROS2 software. Therefore, it makes sense to refresh the ROS2 software to the latest available release. In the ROS and ROS2 world, software is organized into "ROS Workspaces", where packages can be developed, compiled, and be made available to run from the command line. We are going to refresh the ROS2 workspace, which is called "~/ament_ws" and available in the home directory. Follow the [Create a new ROS Workspace guide](https://docs.hello-robot.com/0.2/stretch-install/docs/ros_workspace/) to run the `stretch_create_ament_workspace.sh` script. This will delete the existing "~/ament_ws", create a new one with all of the required ROS2 packages for Stretch, and compile it. Note that building a workspace works differently in ROS2: use `colcon build` instead of `catkin_make`.

## Testing Keyboard Teleop

We can test whether the ROS2 workspace was enabled successfully by testing out the ROS2 drivers package, called "stretch_core", with keyboard teleop. In one terminal, we'll launch Stretch's ROS2 drivers using:

```{.bash .shell-prompt}

-ros2 launch stretch_core stretch_driver.launch.py mode:=manipulation

+ros2 launch stretch_core stretch_driver.launch.py

```

In the second terminal, launch the keyboard teleop node using:

diff --git a/ros2/internal_state_of_stretch.md b/ros2/internal_state_of_stretch.md

index ccb3889..ddc311b 100644

--- a/ros2/internal_state_of_stretch.md

+++ b/ros2/internal_state_of_stretch.md

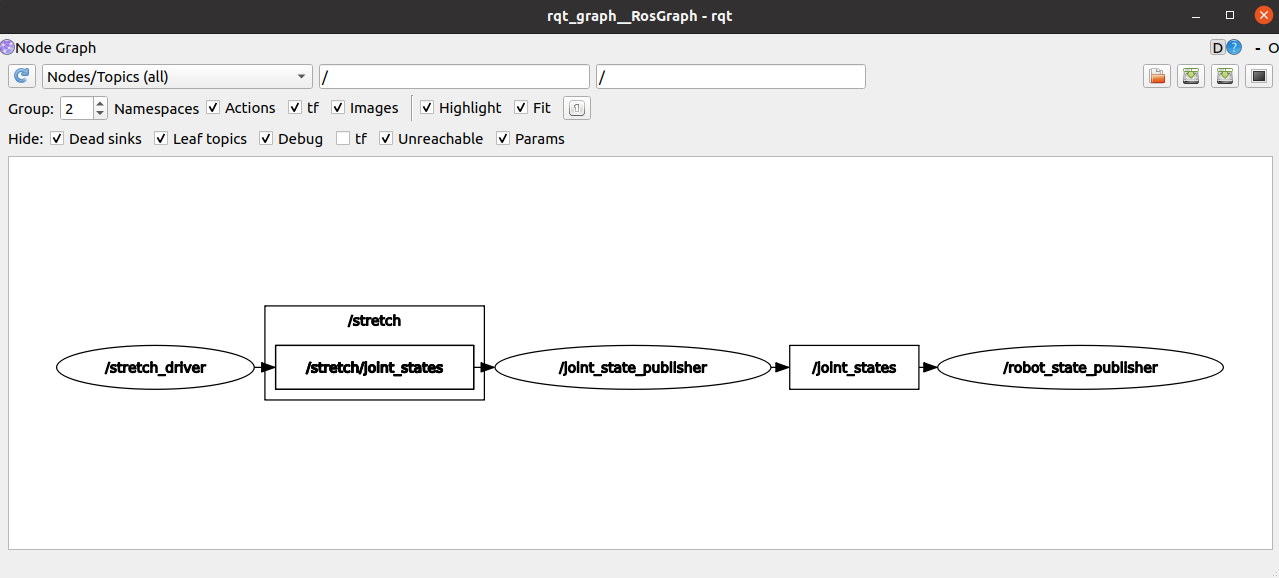

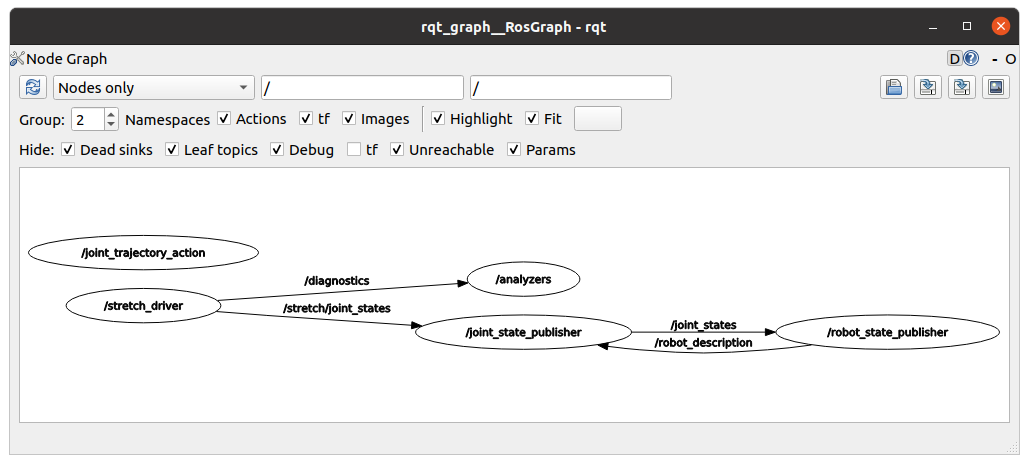

@@ -41,6 +41,10 @@ A powerful tool to visualize the ROS communication is through the rqt_graph pack

ros2 run rqt_graph rqt_graph

```

-

+

+

+

+

+

The graph allows a user to observe and affirm if topics are broadcasted to the correct nodes. This method can also be utilized to debug communication issues.

diff --git a/ros2/perception.md b/ros2/perception.md

new file mode 100644

index 0000000..7813e75

--- /dev/null

+++ b/ros2/perception.md

@@ -0,0 +1,48 @@

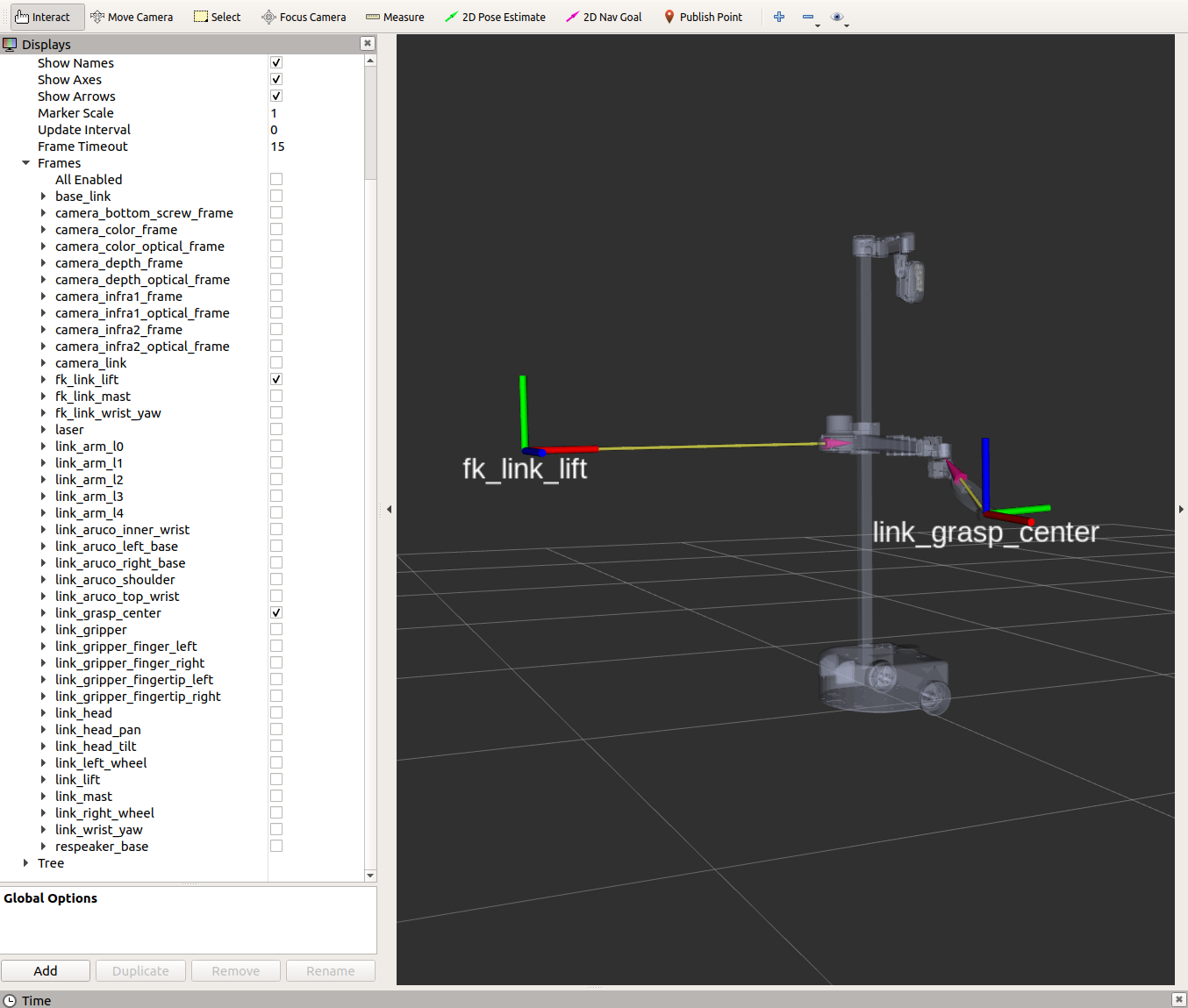

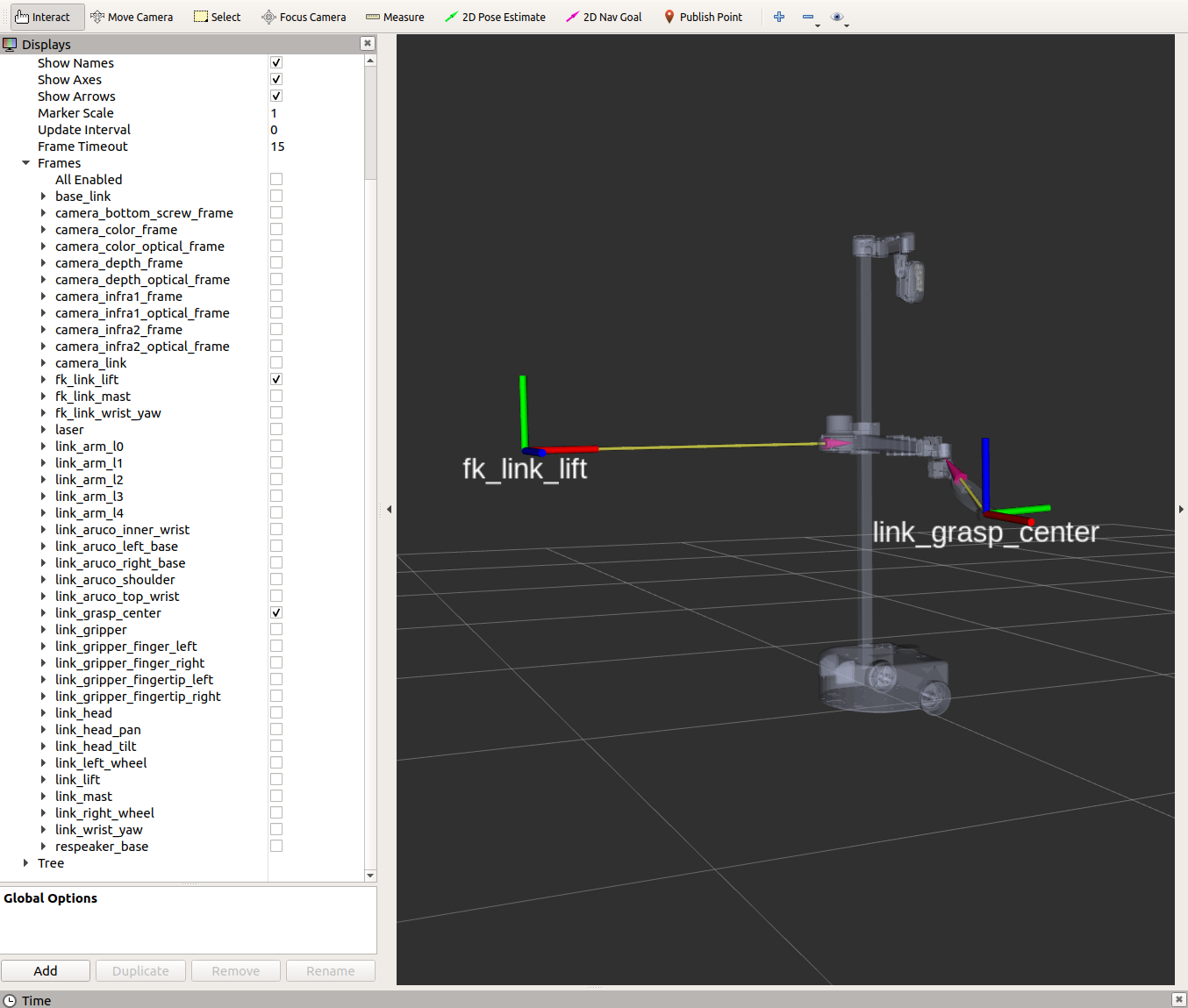

+## Perception Introduction

+

+The Stretch robot is equipped with the [Intel RealSense D435i camera](https://www.intelrealsense.com/depth-camera-d435i/), an essential component that allows the robot to measure and analyze the world around it. In this tutorial, we are going to showcase how to visualize the various topics published by the camera.

+

+Begin by running the `stretch_driver.launch.py` file.

+

+```{.bash .shell-prompt}

+ros2 launch stretch_core stretch_driver.launch.py

+```

+

+To activate the [RealSense camera](https://www.intelrealsense.com/depth-camera-d435i/) and publish topics to be visualized, run the following launch file in a new terminal.

+

+```{.bash .shell-prompt}

+ros2 launch stretch_core d435i_low_resolution.launch.py

+```

+

+Within this tutorial package, there is an [RViz config file](https://github.com/hello-robot/stretch_tutorials/blob/noetic/rviz/perception_example.rviz) with the topics for perception already in the Display tree. You can visualize these topics and the robot model by running the command below in a new terminal.

+

+```{.bash .shell-prompt}

+ros2 run rviz2 rviz2 -d /home/hello-robot/ament_ws/src/stretch_tutorials/rviz/perception_example.rviz

+```

+

+### PointCloud2 Display

+

+A list of displays on the left side of the interface can visualize the camera data. Each display has its properties and status that notify a user if topic messages are received.

+

+For the `PointCloud2` display, a [sensor_msgs/PointCloud2](http://docs.ros.org/en/lunar/api/sensor_msgs/html/msg/PointCloud2.html) message is received on the `/camera/depth/color/points` topic, and the GIF below demonstrates the various display properties when visualizing the data.

+

+

+  +

+

+

+### Image Display

+The `Image` display, when toggled, creates a new rendering window that visualizes a [sensor_msgs/Image](http://docs.ros.org/en/lunar/api/sensor_msgs/html/msg/Image.html) message published on the */camera/color/image_raw* topic. This feature shows the image data from the camera; however, the image comes out sideways.

+

+

+  +

+

+

+### DepthCloud Display

+The `DepthCloud` display is visualized in the main RViz window. This display takes in the depth image and RGB image provided by RealSense to visualize and register a point cloud.

+

+

+  +

+

+

+## Deep Perception

+Hello Robot also has a ROS package that uses deep learning models for various detection demos. A link to the tutorials is provided: [stretch_deep_perception](https://docs.hello-robot.com/0.2/stretch-tutorials/ros2/deep_perception/).

diff --git a/stretch_body/tutorial_dynamixel_servos.md b/stretch_body/tutorial_dynamixel_servos.md

index 899f796..d6c8224 100644

--- a/stretch_body/tutorial_dynamixel_servos.md

+++ b/stretch_body/tutorial_dynamixel_servos.md

@@ -32,7 +32,7 @@ REx_dynamixel_jog.py /dev/hello-dynamixel-head 11

Output:

```{.bash .no-copy}

-[Dynamixel ID:011] ping Succeeded. Dynamixel model number : 1080

+[Dynamixel ID:011] ping Succeeded. Dynamixel model number : 1080. Baud 115200

------ MENU -------

m: menu

a: increment position 50 tick

@@ -52,6 +52,10 @@ t: set max temp

i: set id

d: disable torque

e: enable torque

+x: put in multi-turn mode

+y: put in position mode

+w: put in pwm mode

+f: put in vel mode

-------------------

```

@@ -67,12 +71,14 @@ Output:

```{.bash .no-copy}

For use with S T R E T C H (TM) RESEARCH EDITION from Hello Robot Inc.

----- Rebooting Head ----

+Rebooting: head_pan

[Dynamixel ID:011] Reboot Succeeded.

+Rebooting: head_tilt

[Dynamixel ID:012] Reboot Succeeded.

----- Rebooting Wrist ----

-[Dynamixel ID:013] Reboot Succeeded.

+Rebooting: stretch_gripper

[Dynamixel ID:014] Reboot Succeeded.

+Rebooting: wrist_yaw

+[Dynamixel ID:013] Reboot Succeeded.

```

### Identify Servos on the Bus

@@ -80,38 +86,42 @@ For use with S T R E T C H (TM) RESEARCH EDITION from Hello Robot Inc.

If it is unclear which servos are on the bus, and at what baud rate, you can use the `REx_dynamixel_id_scan.py` tool. Here we see that the two head servos are at ID `11` and `12` at baud `57600`.

```{.bash .shell-prompt}

-REx_dynamixel_id_scan.py /dev/hello-dynamixel-head --baud 57600

+REx_dynamixel_id_scan.py /dev/hello-dynamixel-head

```

Output:

```{.bash .no-copy}

Scanning bus /dev/hello-dynamixel-head at baud rate 57600

----------------------------------------------------------

-[Dynamixel ID:000] ping Failed.

-[Dynamixel ID:001] ping Failed.

-[Dynamixel ID:002] ping Failed.

-[Dynamixel ID:003] ping Failed.

-[Dynamixel ID:004] ping Failed.

-[Dynamixel ID:005] ping Failed.

-[Dynamixel ID:006] ping Failed.

-[Dynamixel ID:007] ping Failed.

-[Dynamixel ID:008] ping Failed.

-[Dynamixel ID:009] ping Failed.

-[Dynamixel ID:010] ping Failed.

-[Dynamixel ID:011] ping Succeeded. Dynamixel model number : 1080

-[Dynamixel ID:012] ping Succeeded. Dynamixel model number : 1060

-[Dynamixel ID:013] ping Failed.

-[Dynamixel ID:014] ping Failed.

-[Dynamixel ID:015] ping Failed.

-[Dynamixel ID:016] ping Failed.

-[Dynamixel ID:017] ping Failed.

-[Dynamixel ID:018] ping Failed.

-[Dynamixel ID:019] ping Failed.

-[Dynamixel ID:020] ping Failed.

-[Dynamixel ID:021] ping Failed.

-[Dynamixel ID:022] ping Failed.

-[Dynamixel ID:023] ping Failed.

-[Dynamixel ID:024] ping Failed.

+Scanning bus /dev/hello-dynamixel-head

+Checking ID 0

+Checking ID 1

+Checking ID 2

+Checking ID 3

+Checking ID 4

+Checking ID 5

+Checking ID 6

+Checking ID 7

+Checking ID 8

+Checking ID 9

+Checking ID 10

+Checking ID 11

+[Dynamixel ID:011] ping Succeeded. Dynamixel model number : 1080. Baud 115200

+Checking ID 12

+[Dynamixel ID:012] ping Succeeded. Dynamixel model number : 1060. Baud 115200

+Checking ID 13

+Checking ID 14

+Checking ID 15

+Checking ID 16

+Checking ID 17

+Checking ID 18

+Checking ID 19

+Checking ID 20

+Checking ID 21

+Checking ID 22

+Checking ID 23

+Checking ID 24

+

```
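
When many IDs are scanned, it can help to pull out just the servos that answered. The sketch below is an illustration under stated assumptions, not a Stretch Body tool: it parses text shaped like the sample output above with a regular expression, and the `parse_scan_output` name is hypothetical.

```python
import re

# Matches lines such as:
# [Dynamixel ID:011] ping Succeeded. Dynamixel model number : 1080. Baud 115200
# The baud suffix is optional so that older output without it still parses.
PING_OK = re.compile(
    r"\[Dynamixel ID:(\d+)\]\s+ping Succeeded\.\s+"
    r"Dynamixel model number\s*:\s*(\d+)(?:\.\s+Baud\s+(\d+))?"
)


def parse_scan_output(text):
    """Hypothetical helper: return (servo_id, model_number, baud) tuples.

    `baud` is None when the line does not report a baud rate.
    """
    found = []
    for line in text.splitlines():
        match = PING_OK.search(line)
        if match:
            servo_id, model, baud = match.groups()
            found.append((int(servo_id), int(model), int(baud) if baud else None))
    return found


sample = """Checking ID 11
[Dynamixel ID:011] ping Succeeded. Dynamixel model number : 1080. Baud 115200
Checking ID 12
[Dynamixel ID:012] ping Succeeded. Dynamixel model number : 1060. Baud 115200
Checking ID 13
"""

print(parse_scan_output(sample))
```

The pattern is tied to the exact wording of the sample output, so it would need adjusting if the tool's messages change.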

### Setting the Servo Baud Rate

@@ -125,10 +135,8 @@ REx_dynamixel_set_baud.py /dev/hello-dynamixel-wrist 13 115200

Output:

```{.bash .no-copy}

---------------------

-Checking servo current baud for 57600

-----

-Identified current baud of 57600. Changing baud to 115200

-Success at changing baud

+

+Success at changing baud. Current baud is 115200 for servo 13 on bus /dev/hello-dynamixel-wrist

```

!!! note

@@ -139,15 +147,15 @@ Success at changing baud

Dynamixel servos come with `ID=1` from the factory. When adding your servos to the end-of-arm tool, you may want to set the servo ID using the `REx_dynamixel_id_change.py` tool. For example:

```{.bash .shell-prompt}

-REx_dynamixel_id_change.py /dev/hello-dynamixel-wrist 1 13 --baud 115200

+REx_dynamixel_id_change.py /dev/hello-dynamixel-wrist 1 13

```

Output:

```{.bash .no-copy}

-[Dynamixel ID:001] ping Succeeded. Dynamixel model number : 1080

+[Dynamixel ID:001] ping Succeeded. Dynamixel model number : 1080. Baud 115200

Ready to change ID 1 to 13. Hit enter to continue:

-[Dynamixel ID:013] ping Succeeded. Dynamixel model number : 1080

+[Dynamixel ID:013] ping Succeeded. Dynamixel model number : 1080. Baud 115200

Success at setting ID to 13

```

diff --git a/stretch_body/tutorial_introduction.md b/stretch_body/tutorial_introduction.md

index e257191..be14641 100644

--- a/stretch_body/tutorial_introduction.md

+++ b/stretch_body/tutorial_introduction.md

@@ -95,24 +95,35 @@ Parameters may be named with a suffix to help describe the unit type. For exampl

### The Robot Status

-The Robot derives from the [Device class](https://github.com/hello-robot/stretch_body/blob/master/body/stretch_body/device.py). It also encapsulates several other Devices:

+The Robot derives from the [Device class](https://github.com/hello-robot/stretch_body/blob/master/body/stretch_body/device.py). Other classes also derive from Device, such as the [Prismatic Joint](https://github.com/hello-robot/stretch_body/blob/master/body/stretch_body/prismatic_joint.py) and the [Dynamixel XL430](https://github.com/hello-robot/stretch_body/blob/master/body/stretch_body/dynamixel_hello_XL430.py). The Robot also encapsulates several other Devices:

-* [robot.head](https://github.com/hello-robot/stretch_body/blob/master/body/stretch_body/head.py)

-* [robot.arm](https://github.com/hello-robot/stretch_body/blob/master/body/stretch_body/arm.py)

-* [robot.lift](https://github.com/hello-robot/stretch_body/blob/master/body/stretch_body/lift.py)

+**Device**

* [robot.base](https://github.com/hello-robot/stretch_body/blob/master/body/stretch_body/base.py)

* [robot.wacc](https://github.com/hello-robot/stretch_body/blob/master/body/stretch_body/wacc.py)

* [robot.pimu](https://github.com/hello-robot/stretch_body/blob/master/body/stretch_body/pimu.py)

+

+**Prismatic Joint**

+* [robot.arm](https://github.com/hello-robot/stretch_body/blob/master/body/stretch_body/arm.py)

+* [robot.lift](https://github.com/hello-robot/stretch_body/blob/master/body/stretch_body/lift.py)

+

+**Dynamixel XL430**

+* [robot.head](https://github.com/hello-robot/stretch_body/blob/master/body/stretch_body/head.py)

* [robot.end_of_arm](https://github.com/hello-robot/stretch_body/blob/master/body/stretch_body/end_of_arm.py)

All devices contain a Status dictionary. The Status contains the most recent sensor and state data of that device. For example, looking at the Arm class we see:

```python

-class Arm(Device):

- def __init__(self):

+class Arm(PrismaticJoint):

+ def __init__(self,usb=None):

+```

+As we can see, the Arm class derives from the PrismaticJoint class, which in turn derives from the Device class:

+

+```python

+class PrismaticJoint(Device):

+ def __init__(self,name,usb=None):

...

- self.status = {'pos': 0.0, 'vel': 0.0, 'force':0.0, \

- 'motor':self.motor.status,'timestamp_pc':0}

+ self.status = {'timestamp_pc':0,'pos':0.0, 'vel':0.0, \

+ 'force':0.0,'motor':self.motor.status}

```

The Status dictionaries are automatically updated by a background thread of the Robot class at around 25Hz. The Status data can be accessed via the Robot class as below:

@@ -189,4 +200,4 @@ robot.stop()

The Dynamixel servos do not use the Hello Robot communication protocol. As such, the head, wrist, and gripper will move immediately upon issuing a motion command.

------

- All materials are Copyright 2022 by Hello Robot Inc. Hello Robot and Stretch are registered trademarks.

\ No newline at end of file

+ All materials are Copyright 2022 by Hello Robot Inc. Hello Robot and Stretch are registered trademarks.

diff --git a/stretch_body/tutorial_parameter_management.md b/stretch_body/tutorial_parameter_management.md

index 05f87f6..01ec140 100644

--- a/stretch_body/tutorial_parameter_management.md

+++ b/stretch_body/tutorial_parameter_management.md

@@ -15,7 +15,9 @@ a.params

```{.python .no-copy}

Out[7]:

-{'chain_pitch': 0.0167,

+{'usb_name': '/dev/hello-motor-arm',

+ 'force_N_per_A': 55.9,

+ 'chain_pitch': 0.0167,

'chain_sprocket_teeth': 10,

'gr_spur': 3.875,

'i_feedforward': 0,

@@ -42,7 +44,9 @@ a.robot_params['lift']

```{.python .no-copy}

Out[9]:

-{'calibration_range_bounds': [1.094, 1.106],

+{'usb_name': '/dev/hello-motor-lift',

+ 'force_N_per_A': 75.0,

+ 'calibration_range_bounds': [1.094, 1.106],

'contact_model': 'effort_pct',

'contact_model_homing': 'effort_pct',

'contact_models': {'effort_pct': {'contact_thresh_calibration_margin': 10.0,

@@ -149,4 +153,4 @@ robot.write_user_param_to_YAML('base.wheel_separation_m', d_avg)

This will update the file `stretch_user_params.yaml`.

------

- All materials are Copyright 2022 by Hello Robot Inc. Hello Robot and Stretch are registered trademarks.

\ No newline at end of file

+ All materials are Copyright 2022 by Hello Robot Inc. Hello Robot and Stretch are registered trademarks.

diff --git a/stretch_body/tutorial_robot_motion.md b/stretch_body/tutorial_robot_motion.md

index ea46850..3c1cf25 100644

--- a/stretch_body/tutorial_robot_motion.md

+++ b/stretch_body/tutorial_robot_motion.md

@@ -4,13 +4,14 @@ As we've seen in previous tutorials, commanding robot motion is simple and strai

```python linenums="1"

import stretch_body.robot

+import time

robot=stretch_body.robot.Robot()

robot.startup()

robot.arm.move_by(0.1)

robot.push_command()

time.sleep(2.0)

-

+

robot.stop()

```

@@ -18,13 +19,14 @@ The absolute motion can be commanded by:

```python linenums="1"

import stretch_body.robot

+import time

robot=stretch_body.robot.Robot()

robot.startup()

robot.arm.move_to(0.1)

robot.push_command()

time.sleep(2.0)

-

+

robot.stop()

```

@@ -34,6 +36,7 @@ In the above examples, we executed a `time.sleep()` after `robot.push_command()`

```python linenums="1"

import stretch_body.robot

+import time

robot=stretch_body.robot.Robot()

robot.startup()

@@ -50,7 +53,7 @@ robot.push_command()

time.sleep(2.0)

robot.arm.move_to(0.0)

robot.arm.wait_until_at_setpoint()

-

+

robot.stop()

```

@@ -118,7 +121,7 @@ robot.arm.wait_until_at_setpoint()

robot.arm.move_to(0.5)

robot.push_command()

robot.arm.wait_until_at_setpoint()

-

+

robot.stop()

```

@@ -130,6 +133,7 @@ As we see here, the `robot.push_command()` call is not required as the motion be

```python

import stretch_body.robot

+import time

from stretch_body.hello_utils import deg_to_rad

robot=stretch_body.robot.Robot()

@@ -144,7 +148,7 @@ robot.head.move_to('head_pan',deg_to_rad(90.0))

robot.head.move_to('head_tilt',deg_to_rad(45.0))

time.sleep(3.0)

-

+

robot.stop()

```

@@ -152,6 +156,7 @@ Similar to the stepper joints, the Dynamixel joints accept motion profile and mo

```python

import stretch_body.robot

+import time

robot=stretch_body.robot.Robot()

robot.startup()

@@ -172,7 +177,7 @@ a = robot.params['head_pan']['motion']['slow']['accel']

robot.head.move_to('head_pan',deg_to_rad(90.0),v_r=v, a_r=a)

time.sleep(3.0)

-

+

robot.stop()

```

diff --git a/stretch_body/tutorial_splined_trajectories.md b/stretch_body/tutorial_splined_trajectories.md

index dc54315..1e02bd2 100644

--- a/stretch_body/tutorial_splined_trajectories.md

+++ b/stretch_body/tutorial_splined_trajectories.md

@@ -61,6 +61,7 @@ Programming a splined trajectory is straightforward. For example, try the follow

import stretch_body.robot

r=stretch_body.robot.Robot()

r.startup()

+#r.arm.motor.disable_sync_mode() # Alternative: if you uncomment this line, comment out the r.push_command() call below and the code will work as well

#Define the waypoints

times = [0.0, 10.0, 20.0]

@@ -73,6 +74,8 @@ for waypoint in zip(times, positions, velocities):

#Begin execution

r.arm.follow_trajectory()

+r.push_command()

+time.sleep(0.1)

#Wait unti completion

while r.arm.is_trajectory_active():

@@ -87,7 +90,7 @@ This will cause the arm to move from its current position to 0.45m, then back to

* This will execute a Cubic spline as we did not pass in accelerations to in `r.arm.trajectory.add`

* The call to `r.arm.follow_trajectory` is non-blocking and the trajectory generation is handled by a background thread of the Robot class

-If you're interested in exploring the trajectory API further the [code for the `stretch_trajectory_jog.py`](https://github.com/hello-robot/stretch_body/blob/master/tools/bin/stretch_trajectory_jog.py) is a great reference to get started.

+If you're interested in exploring the trajectory API further, the [code for the `stretch_trajectory_jog.py`](https://github.com/hello-robot/stretch_body/blob/master/tools/bin/stretch_trajectory_jog.py) is a great reference to get started.

## Advanced: Controller Parameters

diff --git a/stretch_body/tutorial_stretch_body_api.md b/stretch_body/tutorial_stretch_body_api.md

deleted file mode 100644

index 89a7b73..0000000

--- a/stretch_body/tutorial_stretch_body_api.md

+++ /dev/null

@@ -1,448 +0,0 @@

-# Stretch Body API Reference

-

-Stretch Body is the Python interface for working with Stretch. This page serves as a reference for the interfaces defined in the `stretch_body` library.

-See the [Stretch Body Tutorials](https://docs.hello-robot.com/0.2/stretch-tutorials/stretch_body/) for additional information on working with this library.

-

-## The Robot Class

-

-The most common interface to Stretch is the [Robot](#stretch_body.robot.Robot) class. This class encapsulates all devices on the robot. It is typically initialized as:

-

-```python linenums='1'

-import stretch_body.robot

-

-r = stretch_body.robot.Robot()

-if not r.startup():

- exit() # failed to start robot!

-

-# home the joints to find zero, if necessary

-if not r.is_calibrated():

- r.home()

-

-# interact with the robot here

-```

-

-The [`startup()`](#stretch_body.robot.Robot.startup) and [`home()`](#stretch_body.robot.Robot.home) methods start communication with and home each of the robot's devices, respectively. Through the [Robot](#stretch_body.robot.Robot) class, users can interact with all devices on the robot. For example, continuing the example above:

-

-```python linenums='12'

-# moving joints on the robot

-r.arm.pretty_print()

-r.lift.pretty_print()

-r.base.pretty_print()

-r.head.pretty_print()

-r.end_of_arm.pretty_print()

-

-# other devices on the robot

-r.wacc.pretty_print()

-r.pimu.pretty_print()

-

-r.stop()

-```

-

-Each of these devices is defined in separate modules within `stretch_body`. In the following section, we'll look at the API of these classes. The [`stop()`](#stretch_body.robot.Robot.stop) method shuts down communication with the robot's devices.

-

-All methods in the [Robot](#stretch_body.robot.Robot) class are documented below.

-

-::: stretch_body.robot.Robot

-

-## The Device Class

-

-The `stretch_body` library is modular in design. Each subcomponent of Stretch is defined in its class and the [Robot class](#the-robot-class) provides an interface that ties all of these classes together. This modularity allows users to plug in new/modified subcomponents into the [Robot](#stretch_body.robot.Robot) interface by extending the Device class.

-

-It is possible to interface with a single subcomponent of Stretch by initializing its device class directly. In this section, we'll look at the API of seven subclasses of the Device class: the [Arm](#stretch_body.arm.Arm), [Lift](#stretch_body.lift.Lift), [Base](#stretch_body.base.Base), [Head](#stretch_body.head.Head), [EndOfArm](#stretch_body.end_of_arm.EndOfArm), [Wacc](#stretch_body.wacc.Wacc), and [Pimu](#stretch_body.pimu.Pimu) subcomponents of Stretch.

-

-### Using the Arm class

-

-

-

-

-The interface to Stretch's telescoping arm is the [Arm](#stretch_body.arm.Arm) class. It is typically initialized as:

-

-```python linenums='1'

-import stretch_body.arm

-

-a = stretch_body.arm.Arm()

-a.motor.disable_sync_mode()

-if not a.startup():

- exit() # failed to start arm!

-

-a.home()

-

-# interact with the arm here

-```

-

-Since both [Arm](#stretch_body.arm.Arm) and [Robot](#stretch_body.robot.Robot) are subclasses of the [Device](#stretch_body.device.Device) class, the same [`startup()`](#stretch_body.arm.Arm.startup) and [`stop()`](#stretch_body.arm.Arm.stop) methods are available here, as well as other [Device](#stretch_body.device.Device) methods such as [`home()`](#stretch_body.arm.Arm.home). Using the [Arm](#stretch_body.arm.Arm) class, we can read the arm's current state and send commands to the joint. For example, continuing the example above:

-

-```python linenums='11'

-starting_position = a.status['pos']

-

-# move out by 10cm

-a.move_to(starting_position + 0.1)

-a.push_command()

-a.motor.wait_until_at_setpoint()

-

-# move back to starting position quickly

-a.move_to(starting_position, v_m=0.2, a_m=0.25)

-a.push_command()

-a.motor.wait_until_at_setpoint()

-

-a.move_by(0.1) # move out by 10cm

-a.push_command()

-a.motor.wait_until_at_setpoint()

-```

-

-The [`move_to()`](#stretch_body.arm.Arm.move_to) and [`move_by()`](#stretch_body.arm.Arm.move_by) methods queue absolute and relative position commands to the arm, respectively, while the nonblocking [`push_command()`](#stretch_body.arm.Arm.push_command) method pushes the queued position commands to the hardware for execution.

-

-The attribute `motor`, an instance of the [Stepper](#stretch_body.stepper.Stepper) class, has the method [`wait_until_at_setpoint()`](#stretch_body.stepper.Stepper.wait_until_at_setpoint) which blocks program execution until the joint reaches the commanded goal. With [firmware P1 or greater](https://github.com/hello-robot/stretch_firmware/blob/master/tutorials/docs/updating_firmware.md) installed, it is also possible to queue a waypoint trajectory for the arm to follow:

-

-```python linenums='26'

-starting_position = a.status['pos']

-

-# queue a trajectory consisting of four waypoints

-a.trajectory.add(t_s=0, x_m=starting_position)

-a.trajectory.add(t_s=3, x_m=0.15)

-a.trajectory.add(t_s=6, x_m=0.1)

-a.trajectory.add(t_s=9, x_m=0.2)

-

-# trigger trajectory execution

-a.follow_trajectory()

-import time; time.sleep(9)

-```

-

-The attribute `trajectory`, an instance of the [PrismaticTrajectory](#stretch_body.trajectories.PrismaticTrajectory) class, has the method [`add()`](#stretch_body.trajectories.PrismaticTrajectory.add) which adds a single waypoint in a linear sliding trajectory. For a well-formed `trajectory` (see [`is_valid()`](#stretch_body.trajectories.Spline.is_valid)), the [`follow_trajectory()`](#stretch_body.arm.Arm.follow_trajectory) method starts tracking the trajectory for the telescoping arm.

-

-It is also possible to dynamically restrict the arm joint range:

-

-```python linenums='37'

-range_upper_limit = 0.3 # meters

-

-# set soft limits on arm's range

-a.set_soft_motion_limit_min(0)

-a.set_soft_motion_limit_max(range_upper_limit)

-a.push_command()

-

-# command the arm outside the valid range

-a.move_to(0.4)

-a.push_command()

-a.motor.wait_until_at_setpoint()

-print(a.status['pos']) # we should expect to see ~0.3

-

-a.stop()

-```

-

-The [`set_soft_motion_limit_min/max()`](#stretch_body.arm.Arm.set_soft_motion_limit_min) methods form the basis of an experimental [self-collision avoidance](https://github.com/hello-robot/stretch_body/blob/master/body/stretch_body/robot_collision.py#L47) system built into Stretch Body.

-

-All methods in the [Arm class](#stretch_body.arm.Arm) are documented below.

-

-::: stretch_body.arm.Arm

-

-### Using the Lift class

-

-

-

-

-The interface to Stretch's lift is the [Lift](#stretch_body.lift.Lift) class. It is typically initialized as:

-

-```python linenums='1'

-import stretch_body.lift

-

-l = stretch_body.lift.Lift()

-l.motor.disable_sync_mode()

-if not l.startup():

- exit() # failed to start lift!

-

-l.home()

-

-# interact with the lift here

-```

-

-The [`startup()`](#stretch_body.lift.Lift.startup) and [`home()`](#stretch_body.lift.Lift.home) methods are extended from the [Device](#stretch_body.device.Device) class. Reading the lift's current state and sending commands to the joint occurs similarly to the [Arm](#stretch_body.arm.Arm) class:

-

-```python linenums='11'

-starting_position = l.status['pos']

-

-# move up by 10cm

-l.move_to(starting_position + 0.1)

-l.push_command()

-l.motor.wait_until_at_setpoint()

-```

-

-The attribute `status` is a dictionary of the joint's current status. This state information is updated in the background in real-time by default (disable by initializing as [`startup(threading=False)`](#stretch_body.lift.Lift.startup)). Use the [`pretty_print()`](#stretch_body.lift.Lift.pretty_print) method to print out this state info in a human-interpretable format. Setting up waypoint trajectories for the lift is also similar to the [Arm](#stretch_body.arm.Arm):

-

-```python linenums='17'

-starting_position = l.status['pos']

-

-# queue a trajectory consisting of three waypoints

-l.trajectory.add(t_s=0, x_m=starting_position, v_m=0.0)

-l.trajectory.add(t_s=3, x_m=0.5, v_m=0.0)

-l.trajectory.add(t_s=6, x_m=0.6, v_m=0.0)

-

-# trigger trajectory execution

-l.follow_trajectory()

-import time; time.sleep(6)

-```

-

-The attribute `trajectory` is also an instance of the [PrismaticTrajectory](#stretch_body.trajectories.PrismaticTrajectory) class, and by providing the instantaneous velocity argument `v_m` to the [`add()`](#stretch_body.trajectories.PrismaticTrajectory.add) method, a cubic spline can be loaded into the joint's `trajectory`. The call to [`follow_trajectory()`](#stretch_body.lift.Lift.follow_trajectory) begins hardware tracking of the spline.

-

-Finally, setting soft motion limits for the lift's range can be done using:

-

-```python linenums='27'

-# cut out 0.2m from the top and bottom of the lift's range

-l.set_soft_motion_limit_min(0.2)

-l.set_soft_motion_limit_max(0.8)

-l.push_command()

-

-l.stop()

-```

-

-The [`set_soft_motion_limit_min/max()`](#stretch_body.lift.Lift.set_soft_motion_limit_min) methods perform clipping of the joint's range at the firmware level (can persist across reboots).

-

-All methods in the [Lift](#stretch_body.lift.Lift) class are documented below.

-

-::: stretch_body.lift.Lift

-

-### Using the Base class

-

-

-

-

-| | Item | Notes |

-| ------- | -------------- | --------------------------------------------------------- |

-| A | Drive wheels | 4 inch diameter, urethane rubber shore 60A |

-| B | Cliff sensors | Sharp GP2Y0A51SK0F, Analog, range 2-15 cm |

-| C | Mecanum wheel | Diameter 50mm |

-

-The interface to Stretch's mobile base is the [Base](#stretch_body.base.Base) class. It is typically initialized as:

-

-```python linenums='1'

-import stretch_body.base

-

-b = stretch_body.base.Base()

-b.left_wheel.disable_sync_mode()

-b.right_wheel.disable_sync_mode()

-if not b.startup():

- exit() # failed to start base!

-

-# interact with the base here

-```

-

-Stretch's mobile base is a differential drive configuration. The left and right wheels are accessible through [Base](#stretch_body.base.Base) `left_wheel` and `right_wheel` attributes, both of which are instances of the [Stepper](#stretch_body.stepper.Stepper) class. The [`startup()`](#stretch_body.base.Base.startup) method is extended from the [Device](#stretch_body.device.Device) class. Since the mobile base is unconstrained, there is no homing method. The [`pretty_print()`](#stretch_body.base.Base.pretty_print) method prints out mobile base state information in a human-interpretable format. We can read the base's current state and send commands using:

-

-```python linenums='10'

-b.pretty_print()

-

-# translate forward by 10cm

-b.translate_by(0.1)

-b.push_command()

-b.left_wheel.wait_until_at_setpoint()

-

-# rotate counter-clockwise by 90 degrees

-b.rotate_by(1.57)

-b.push_command()

-b.left_wheel.wait_until_at_setpoint()

-```

-

-The [`translate_by()`](#stretch_body.base.Base.translate_by) and [`rotate_by()`](#stretch_body.base.Base.rotate_by) methods send relative commands similar to the way [`move_by()`](#stretch_body.lift.Lift.move_by) behaves for the single degree of freedom joints.

-

-The mobile base also supports velocity control:

-

-```python linenums='21'

-# command the base to translate forward at 5cm / second

-b.set_translate_velocity(0.05)

-b.push_command()

-import time; time.sleep(1)

-

-# command the base to rotate counter-clockwise at 0.1rad / second

-b.set_rotational_velocity(0.1)

-b.push_command()

-time.sleep(1)

-

-# command the base with translational and rotational velocities

-b.set_velocity(0.05, 0.1)

-b.push_command()

-time.sleep(1)

-

-# stop base motion

-b.enable_freewheel_mode()

-b.push_command()

-```

-

-The [`set_translate_velocity()`](#stretch_body.base.Base.set_translate_velocity) and [`set_rotational_velocity()`](#stretch_body.base.Base.set_rotational_velocity) methods give velocity control over the translational and rotational components of the mobile base independently. The [`set_velocity()`](#stretch_body.base.Base.set_velocity) method gives control over both of these components simultaneously.

-

-To halt motion, you can command zero velocities or command the base into freewheel mode using [`enable_freewheel_mode()`](#stretch_body.base.Base.enable_freewheel_mode). The mobile base also supports waypoint trajectory following, but the waypoints are part of the SE2 group, where a desired waypoint is defined as an (x, y) point and a theta orientation:

-

-```python linenums='39'

-# reset odometry calculation

-b.first_step = True

-b.pull_status()

-

-# queue a trajectory consisting of three waypoints

-b.trajectory.add(time=0, x=0.0, y=0.0, theta=0.0)

-b.trajectory.add(time=3, x=0.1, y=0.0, theta=0.0)

-b.trajectory.add(time=6, x=0.0, y=0.0, theta=0.0)

-

-# trigger trajectory execution

-b.follow_trajectory()

-import time; time.sleep(6)

-print(b.status['x'], b.status['y'], b.status['theta']) # we should expect to see around (0.0, 0.0, 0.0 or 6.28)

-

-b.stop()

-```

-

-!!! warning

- The [Base](#stretch_body.base.Base) waypoint trajectory following has no notion of obstacles in the environment. It will blindly follow the commanded waypoints. For obstacle avoidance, we recommend employing perception and a path planner.

-

-The attribute `trajectory` is an instance of the [DiffDriveTrajectory](#stretch_body.trajectories.DiffDriveTrajectory) class. The call to [`follow_trajectory()`](#stretch_body.base.Base.follow_trajectory) begins hardware tracking of the spline.

-

-All methods of the [Base](#stretch_body.base.Base) class are documented below.

-

-::: stretch_body.base.Base

-

-### Using the Head class

-

-

-

-

-The interface to Stretch's head is the [Head](#stretch_body.head.Head) class. The head contains an Intel Realsense D435i depth camera. The pan and tilt joints in the head allow Stretch to swivel and capture depth imagery of its surrounding. The head is typically initialized as:

-

-```python linenums='1'

-import stretch_body.head

-

-h = stretch_body.head.Head()

-if not h.startup():

- exit() # failed to start head!

-

-h.home()

-

-# interact with the head here

-```

-

-[Head](#stretch_body.head.Head) is a subclass of the [DynamixelXChain](#stretch_body.dynamixel_X_chain.DynamixelXChain) class, which in turn is a subclass of the [Device](#stretch_body.device.Device) class. Therefore, some of [Head's](#stretch_body.head.Head) methods, such as [`startup()`](#stretch_body.head.Head.startup) and [`home()`](#stretch_body.head.Head.home) are extended from the [Device](#stretch_body.device.Device) class, while others come from the [DynamixelXChain](#stretch_body.dynamixel_X_chain.DynamixelXChain) class. Reading the head's current state and sending commands to its revolute joints (head pan and tilt) can be achieved using:

-

-```python linenums='10'

-starting_position = h.status['head_pan']['pos']

-

-# look right by 90 degrees

-h.move_to('head_pan', starting_position + 1.57)

-h.get_joint('head_pan').wait_until_at_setpoint()

-

-# tilt up by 30 degrees

-h.move_by('head_tilt', -1.57 / 3)

-h.get_joint('head_tilt').wait_until_at_setpoint()

-

-# look down towards the wheels

-h.pose('wheels')

-import time; time.sleep(3)

-

-# look ahead

-h.pose('ahead')

-time.sleep(3)

-```

-

-The attribute `status` is a dictionary of dictionaries, where each subdictionary is the status of one of the head's joints. This state information is updated in the background in real-time by default (disable by initializing as [`startup(threading=False)`](#stretch_body.head.Head.startup)). Use the [`pretty_print()`](#stretch_body.head.Head.pretty_print) method to print out this state information in a human-interpretable format.

-

-Commanding the head's revolute joints is done through the [`move_to()`](#stretch_body.head.Head.move_to) and [`move_by()`](#stretch_body.head.Head.move_by) methods. Notice that, unlike the previous joints, no push command call is required here. These joints are Dynamixel servos, which behave differently than the Hello Robot steppers. Their commands are not queued and are executed as soon as they're received.

-

-[Head's](#stretch_body.head.Head) two joints, the 'head_pan' and 'head_tilt' are instances of the [DynamixelHelloXL430](#stretch_body.dynamixel_hello_XL430.DynamixelHelloXL430) class and are retrievable using the [`get_joint()`](#stretch_body.head.Head.get_joint) method. They have the [`wait_until_at_setpoint()`](#stretch_body.dynamixel_hello_XL430.DynamixelHelloXL430.wait_until_at_setpoint) method, which blocks program execution until the joint reaches the commanded goal.

-

-The [`pose()`](#stretch_body.head.Head.pose) method makes it easy to command the head to common head poses (e.g. looking 'ahead', at the end-of-arm 'tool', obstacles in front of the 'wheels', or 'up').

-

-The head supports waypoint trajectories as well:

-

-```python linenums='27'

-# queue a trajectory consisting of three waypoints

-h.get_joint('head_tilt').trajectory.add(t_s=0, x_r=0.0)

-h.get_joint('head_tilt').trajectory.add(t_s=3, x_r=-1.0)

-h.get_joint('head_tilt').trajectory.add(t_s=6, x_r=0.0)

-h.get_joint('head_pan').trajectory.add(t_s=0, x_r=0.1)

-h.get_joint('head_pan').trajectory.add(t_s=3, x_r=-0.9)

-h.get_joint('head_pan').trajectory.add(t_s=6, x_r=0.1)

-

-# trigger trajectory execution

-h.follow_trajectory()

-import time; time.sleep(6)

-```

-

-The head pan and tilt [DynamixelHelloXL430](#stretch_body.dynamixel_hello_XL430.DynamixelHelloXL430) instances have an attribute `trajectory`, which is an instance of the [RevoluteTrajectory](#stretch_body.trajectories.RevoluteTrajectory) class. The call to [`follow_trajectory()`](#stretch_body.dynamixel_X_chain.DynamixelXChain.follow_trajectory) begins software tracking of the spline.

-

-Finally, setting soft motion limits for the head's pan and tilt range can be achieved using:

-

-```python linenums='38'

-# clip the head_pan's range

-h.get_joint('head_pan').set_soft_motion_limit_min(-1.0)

-h.get_joint('head_pan').set_soft_motion_limit_max(1.0)

-

-# clip the head_tilt's range

-h.get_joint('head_tilt').set_soft_motion_limit_min(-1.0)

-h.get_joint('head_tilt').set_soft_motion_limit_max(0.1)

-

-h.stop()

-```

-

-The [`set_soft_motion_limit_min/max()`](#stretch_body.dynamixel_X_chain.DynamixelXChain.set_soft_motion_limit_min) methods perform clipping of the joint's range at the software level (cannot persist across reboots).

-

-All methods of the [Head](#stretch_body.head.Head) class are documented below.

-

-::: stretch_body.head.Head

-

-### Using the EndOfArm class

-

-The interface to Stretch's end-of-arm is the [EndOfArm](#stretch_body.end_of_arm.EndOfArm) class. It is typically initialized as:

-

-```python linenums='1'

-import stretch_body.end_of_arm

-

-e = stretch_body.end_of_arm.EndOfArm()

-if not e.startup(threaded=True):

- exit() # failed to start end of arm!

-

-# interact with the end of arm here

-

-e.stop()

-```

-

-All methods of the [EndOfArm](#stretch_body.end_of_arm.EndOfArm) class are documented below.

-

-::: stretch_body.end_of_arm.EndOfArm

-

-### Using the Wacc class

-

-The interface to Stretch's wrist board is the [Wacc](#stretch_body.wacc.Wacc) (wrist + accelerometer) class. This board provides an Arduino and accelerometer sensor that is accessible from the [Wacc](#stretch_body.wacc.Wacc) class. It is typically initialized as:

-

-```python linenums='1'

-import stretch_body.wacc

-

-w = stretch_body.wacc.Wacc()

-if not w.startup(threaded=True):

- exit() # failed to start wacc!

-

-# interact with the wacc here

-

-w.stop()

-```

-

-All methods of the [Wacc](#stretch_body.wacc.Wacc) class are documented below.

-

-::: stretch_body.wacc.Wacc

-

-### Using the Pimu class

-

-The interface to Stretch's power board is the [Pimu](#stretch_body.pimu.Pimu) (power + IMU) class. This board provides a 9-DOF IMU that is accessible from the [Pimu](#stretch_body.pimu.Pimu) class. It is typically initialized as:

-

-```python linenums='1'

-import stretch_body.pimu

-

-p = stretch_body.pimu.Pimu()

-if not p.startup(threaded=True):

- exit() # failed to start pimu!

-

-# interact with the pimu here

-

-p.stop()

-```

-

-All methods of the [Pimu](#stretch_body.pimu.Pimu) class are documented below.

-

-::: stretch_body.pimu.Pimu

-

-------

- All materials are Copyright 2022 by Hello Robot Inc. Hello Robot and Stretch are registered trademarks.

\ No newline at end of file

+

+

+

+

+

+

+

+

+

+ +

+ +

+