Date: Tue, 12 Sep 2023 13:11:18 -0700

Subject: [PATCH 06/15] Update the md file with the newest version of code

---

ros2/example_6.md | 46 +++++++++++++++++++++++++++++-----------------

1 file changed, 29 insertions(+), 17 deletions(-)

diff --git a/ros2/example_6.md b/ros2/example_6.md

index da075fb..c14a14e 100644

--- a/ros2/example_6.md

+++ b/ros2/example_6.md

@@ -1,6 +1,6 @@

## Example 6

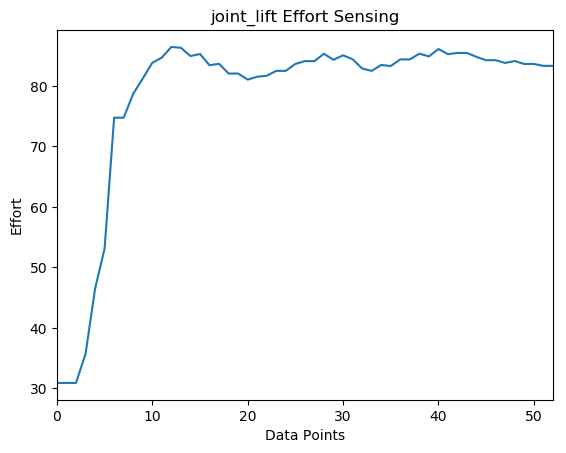

-In this example, we will review a Python script that prints and stores the effort values from a specified joint. If you are looking for a continuous print of the joint state efforts while Stretch is in action, then you can use the [rostopic command-line tool](https://docs.ros.org/en/iron/Tutorials/Beginner-CLI-Tools/Understanding-ROS2-Topics/Understanding-ROS2-Topics.html) shown in the [Internal State of Stretch Tutorial](https://github.com/hello-robot/stretch_tutorials/blob/master/ros2/internal_state_of_stretch.md).

+In this example, we will review a Python script that prints and stores the effort values from a specified joint. If you are looking for a continuous print of the joint state efforts while Stretch is in action, then you can use the [ros2 topic command-line tool](https://docs.ros.org/en/iron/Tutorials/Beginner-CLI-Tools/Understanding-ROS2-Topics/Understanding-ROS2-Topics.html) shown in the [Internal State of Stretch Tutorial](https://github.com/hello-robot/stretch_tutorials/blob/master/ros2/internal_state_of_stretch.md).

@@ -30,9 +30,11 @@ import rclpy

import hello_helpers.hello_misc as hm

import os

import csv

+import time

import pandas as pd

+import matplotlib

+matplotlib.use('tkagg')

import matplotlib.pyplot as plt

-import time

from matplotlib import animation

from datetime import datetime

from control_msgs.action import FollowJointTrajectory

@@ -46,6 +48,8 @@ class JointActuatorEffortSensor(hm.HelloNode):

self.joint_effort = []

self.save_path = '/home/hello-robot/ament_ws/src/stretch_tutorials/stored_data'

self.export_data = export_data

+ self.result = False

+ self.file_name = datetime.now().strftime("effort_sensing_tutorial_%Y%m%d%I")

def issue_command(self):

@@ -59,6 +63,8 @@ class JointActuatorEffortSensor(hm.HelloNode):

trajectory_goal.trajectory.header.stamp = self.get_clock().now().to_msg()

trajectory_goal.trajectory.header.frame_id = 'base_link'

+ while self.joint_state is None:

+ time.sleep(0.1)

self._send_goal_future = self.trajectory_client.send_goal_async(trajectory_goal, self.feedback_callback)

self.get_logger().info('Sent position goal = {0}'.format(trajectory_goal))

self._send_goal_future.add_done_callback(self.goal_response_callback)

@@ -75,8 +81,8 @@ class JointActuatorEffortSensor(hm.HelloNode):

self._get_result_future.add_done_callback(self.get_result_callback)

def get_result_callback(self, future):

- result = future.result().result

- self.get_logger().info('Sent position goal = {0}'.format(result))

+ self.result = future.result().result

+ self.get_logger().info('Sent position goal = {0}'.format(self.result))

def feedback_callback(self, feedback_msg):

if 'wrist_extension' in self.joints:

@@ -93,9 +99,9 @@ class JointActuatorEffortSensor(hm.HelloNode):

print("effort: " + str(current_effort))

else:

self.joint_effort.append(current_effort)

-

+

if self.export_data:

- file_name = datetime.now().strftime("%Y-%m-%d_%I-%p")

+ file_name = self.file_name

completeName = os.path.join(self.save_path, file_name)

with open(completeName, "w") as f:

writer = csv.writer(f)

@@ -103,8 +109,9 @@ class JointActuatorEffortSensor(hm.HelloNode):

writer.writerows(self.joint_effort)

def plot_data(self, animate = True):

- time.sleep(0.1)

- file_name = datetime.now().strftime("%Y-%m-%d_%I-%p")

+ while not self.result:

+ time.sleep(0.1)

+ file_name = self.file_name

self.completeName = os.path.join(self.save_path, file_name)

self.data = pd.read_csv(self.completeName)

self.y_anim = []

@@ -156,7 +163,6 @@ def main():

node.plot_data()

node.new_thread.join()

-

except KeyboardInterrupt:

node.get_logger().info('interrupt received, so shutting down')

node.destroy_node()

@@ -165,6 +171,7 @@ def main():

if __name__ == '__main__':

main()

+

```

### The Code Explained

@@ -181,9 +188,11 @@ import rclpy

import hello_helpers.hello_misc as hm

import os

import csv

+import time

import pandas as pd

+import matplotlib

+matplotlib.use('tkagg')

import matplotlib.pyplot as plt

-import time

from matplotlib import animation

from datetime import datetime

from control_msgs.action import FollowJointTrajectory

@@ -211,9 +220,11 @@ Create a list of the desired joints you want to print.

self.joint_effort = []

self.save_path = '/home/hello-robot/ament_ws/src/stretch_tutorials/stored_data'

self.export_data = export_data

+self.result = False

+self.file_name = datetime.now().strftime("effort_sensing_tutorial_%Y%m%d%I")

```

-Create an empty list to store the joint effort values. The `self.save_path` is the directory path where the .txt file of the effort values will be stored. You can change this path to a preferred directory. The `self.export_data` is a boolean and its default value is set to `True`. If set to `False`, then the joint values will be printed in the terminal, otherwise, it will be stored in a .txt file and that's what we want to see the plot graph.

+Create an empty list to store the joint effort values. `self.save_path` is the directory where the .txt file of effort values will be stored; you can change this path to a preferred directory. `self.export_data` is a boolean whose default value is `True`. If it is set to `False`, the joint values are printed in the terminal; otherwise they are stored in a .txt file, which is what we need in order to plot the graph. We also name the text file up front and keep the name in `self.file_name` so that we can access it later.
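
As a rough illustration of how the file name is built, the sketch below applies the same format string to two fixed timestamps. The helper name `make_file_name` is hypothetical and is not part of the tutorial script. Note that `%I` is the hour on a 12-hour clock, so two runs on the same day at 01:xx and 13:xx produce the same name, and because the file is opened in `"w"` mode the later run would overwrite the earlier data.

```python
from datetime import datetime


def make_file_name(now=None):
    """Hypothetical helper: build the file name with the tutorial's format string."""
    if now is None:
        now = datetime.now()
    # Text without a % directive is copied as-is; %Y%m%d is the date
    # and %I is the hour on a 12-hour clock (01-12).
    return now.strftime("effort_sensing_tutorial_%Y%m%d%I")


# Two fixed timestamps on the same day, twelve hours apart.
morning = make_file_name(datetime(2023, 9, 12, 1, 11, 18))
afternoon = make_file_name(datetime(2023, 9, 12, 13, 11, 18))
print(morning)
print(afternoon)
```

If unique names per run matter for your use case, a 24-hour directive such as `%H` together with minutes and seconds would avoid the collision; that is a suggestion, not what the tutorial code does.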

```python

self._send_goal_future = self.trajectory_client.send_goal_async(trajectory_goal, self.feedback_callback)

@@ -243,7 +254,7 @@ We need the goal_handle to request the result with the method get_result_async.

```python

def get_result_callback(self, future):

- result = future.result().result

+ self.result = future.result().result

self.get_logger().info('Sent position goal = {0}'.format(self.result))

```

In the result callback we log the result of our position goal.

@@ -283,25 +294,26 @@ Use a conditional statement to print effort values in the terminal or store valu

```python

if self.export_data:

- file_name = datetime.now().strftime("%Y-%m-%d_%I-%p")

+ file_name = self.file_name

completeName = os.path.join(self.save_path, file_name)

with open(completeName, "w") as f:

writer = csv.writer(f)

writer.writerow(self.joints)

writer.writerows(self.joint_effort)

```

-A conditional statement is used to export the data to a .txt file. The file's name is set to the date and time the node was executed.

+A conditional statement is used to export the data to a .txt file. The file's name is set to the one we created at the beginning.
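
The export step can be tried without a robot. The following is a minimal sketch, assuming a single `wrist_extension` column and made-up effort values; the temporary directory stands in for `self.save_path` and the file name is a placeholder.

```python
import csv
import os
import tempfile

joints = ["wrist_extension"]             # header row: one column per joint
joint_effort = [[10.2], [10.5], [11.1]]  # made-up samples, one row per feedback message

save_path = tempfile.mkdtemp()           # stand-in for self.save_path
complete_name = os.path.join(save_path, "effort_sensing_example")

# "w" truncates any existing file, so each export rewrites the whole file.
with open(complete_name, "w", newline="") as f:
    writer = csv.writer(f)
    writer.writerow(joints)
    writer.writerows(joint_effort)

# Read the file back to confirm the layout: a header followed by data rows.
with open(complete_name, newline="") as f:
    rows = list(csv.reader(f))
print(rows)
```

Because the first row holds the joint names, a reader such as `pd.read_csv` can use it as the column header, which is what the plotting code relies on when it iterates over the columns.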

```python

def plot_data(self, animate = True):

- time.sleep(0.1)

- file_name = datetime.now().strftime("%Y-%m-%d_%I-%p")

+ while not self.result:

+ time.sleep(0.1)

+ file_name = self.file_name

self.completeName = os.path.join(self.save_path, file_name)

self.data = pd.read_csv(self.completeName)

self.y_anim = []

self.animate = animate

```

-This function will help us initialize some values to plot our data, the file is going to be the one we created and we need to create an empty list for the animation

+This function initializes some values for plotting our data. We wait until the result arrives before we start plotting, because the file could still be receiving values and we want to plot every point. We also create an empty list for the animation.
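
The waiting logic can be sketched in isolation. The class below is a hypothetical stand-in for the node, and a `threading.Timer` simulates the action server delivering its result. The `timeout_s` guard is an addition for the sketch and is not in the tutorial code, which polls indefinitely.

```python
import threading
import time


class ResultWaiter:
    """Hypothetical stand-in for the node; only the waiting logic is shown."""

    def __init__(self):
        self.result = False  # stays falsy until the result callback stores a value

    def get_result_callback(self, result):
        # In the tutorial this value comes from future.result().result.
        self.result = result

    def wait_for_result(self, poll_s=0.1, timeout_s=5.0):
        # Poll the flag as plot_data does, but give up after timeout_s seconds.
        deadline = time.monotonic() + timeout_s
        while not self.result:
            if time.monotonic() > deadline:
                return False
            time.sleep(poll_s)
        return True


waiter = ResultWaiter()
# Simulate the result arriving 0.3 seconds after the goal was sent.
timer = threading.Timer(0.3, waiter.get_result_callback, args=("done",))
timer.start()
finished = waiter.wait_for_result()
timer.join()
print(finished, waiter.result)
```

Polling only works here because the callback runs on another thread; in the tutorial the node's callbacks are serviced separately from the thread that calls `plot_data`.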

```python

for joint in self.data.columns:

From 216f8bc229b952bdfab9251ce9016430255fd08b Mon Sep 17 00:00:00 2001

From: hello-jesus

Date: Mon, 18 Sep 2023 11:57:20 -0700

Subject: [PATCH 07/15] Update links to Humble documentation

---

ros2/aruco_marker_detection.md | 22 +++++++++++-----------

ros2/example_1.md | 2 +-

ros2/example_2.md | 4 ++--

ros2/example_3.md | 2 +-

ros2/example_4.md | 2 +-

ros2/example_5.md | 6 +++---

ros2/example_6.md | 11 ++++++-----

ros2/example_7.md | 6 +++---

8 files changed, 28 insertions(+), 27 deletions(-)

diff --git a/ros2/aruco_marker_detection.md b/ros2/aruco_marker_detection.md

index fee5fff..fa28eeb 100644

--- a/ros2/aruco_marker_detection.md

+++ b/ros2/aruco_marker_detection.md

@@ -11,16 +11,16 @@ ros2 launch stretch_core stretch_driver.launch.py

To activate the RealSense camera and publish topics to be visualized, run the following launch file in a new terminal.

```{.bash .shell-prompt}

-ros2 launch stretch_core d435i_high_resolution.launch

+ros2 launch stretch_core d435i_high_resolution.launch.py

```

-Next, in a new terminal, run the stretch ArUco launch file which will bring up the [detect_aruco_markers](https://github.com/hello-robot/stretch_ros2/blob/iron/stretch_core/stretch_core/detect_aruco_markers.py) node.

+Next, in a new terminal, run the stretch ArUco launch file which will bring up the [detect_aruco_markers](https://github.com/hello-robot/stretch_ros2/blob/humble/stretch_core/stretch_core/detect_aruco_markers.py) node.

```{.bash .shell-prompt}

ros2 launch stretch_core stretch_aruco.launch.py

```

-Within this tutorial package, there is an [RViz config file](https://github.com/hello-robot/stretch_tutorials/blob/iron/rviz/aruco_detector_example.rviz) with the topics for the transform frames in the Display tree. You can visualize these topics and the robot model by running the command below in a new terminal.

+Within this tutorial package, there is an [RViz config file](https://github.com/hello-robot/stretch_tutorials/blob/humble/rviz/aruco_detector_example.rviz) with the topics for the transform frames in the Display tree. You can visualize these topics and the robot model by running the command below in a new terminal.

```{.bash .shell-prompt}

ros2 run rviz2 rviz2 -d /home/hello-robot/ament_ws/src/stretch_tutorials/rviz/aruco_detector_example.rviz

@@ -37,9 +37,9 @@ ros2 run stretch_core keyboard_teleop

### The ArUco Marker Dictionary

-When defining the ArUco markers on Stretch, hello robot utilizes a YAML file, [stretch_marker_dict.yaml](https://github.com/hello-robot/stretch_ros2/blob/iron/stretch_core/config/stretch_marker_dict.yaml), that holds the information about the markers.

+When defining the ArUco markers on Stretch, hello robot utilizes a YAML file, [stretch_marker_dict.yaml](https://github.com/hello-robot/stretch_ros2/blob/humble/stretch_core/config/stretch_marker_dict.yaml), that holds the information about the markers.

-If [detect_aruco_markers](https://github.com/hello-robot/stretch_ros2/blob/iron/stretch_core/stretch_core/detect_aruco_markers.py) node doesn’t find an entry in [stretch_marker_dict.yaml](https://github.com/hello-robot/stretch_ros2/blob/iron/stretch_core/config/stretch_marker_dict.yaml) for a particular ArUco marker ID number, it uses the default entry. For example, most robots have shipped with the following default entry:

+If [detect_aruco_markers](https://github.com/hello-robot/stretch_ros2/blob/humble/stretch_core/stretch_core/detect_aruco_markers.py) node doesn’t find an entry in [stretch_marker_dict.yaml](https://github.com/hello-robot/stretch_ros2/blob/humble/stretch_core/config/stretch_marker_dict.yaml) for a particular ArUco marker ID number, it uses the default entry. For example, most robots have shipped with the following default entry:

```yaml

'default':

@@ -72,7 +72,7 @@ and the following entry for the ArUco marker on the top of the wrist

'length_mm': 23.5

```

-The `length_mm` value used by [detect_aruco_markers](https://github.com/hello-robot/stretch_ros2/blob/iron/stretch_core/stretch_core/detect_aruco_markers.py) is important for estimating the pose of an ArUco marker.

+The `length_mm` value used by [detect_aruco_markers](https://github.com/hello-robot/stretch_ros2/blob/humble/stretch_core/stretch_core/detect_aruco_markers.py) is important for estimating the pose of an ArUco marker.

!!! note

If the actual width and height of the marker do not match this value, then pose estimation will be poor. Thus, carefully measure custom Aruco markers.

@@ -81,26 +81,26 @@ The `length_mm` value used by [detect_aruco_markers](https://github.com/hello-ro

'use_rgb_only': False

```

-If `use_rgb_only` is `True`, [detect_aruco_markers](https://github.com/hello-robot/stretch_ros2/blob/iron/stretch_core/stretch_core/detect_aruco_markers.py) will ignore depth images from the [Intel RealSense D435i depth camera](https://www.intelrealsense.com/depth-camera-d435i/) when estimating the pose of the marker and will instead only use RGB images from the D435i.

+If `use_rgb_only` is `True`, [detect_aruco_markers](https://github.com/hello-robot/stretch_ros2/blob/humble/stretch_core/stretch_core/detect_aruco_markers.py) will ignore depth images from the [Intel RealSense D435i depth camera](https://www.intelrealsense.com/depth-camera-d435i/) when estimating the pose of the marker and will instead only use RGB images from the D435i.

```yaml

'name': 'wrist_top'

```

-`name` is used for the text string of the ArUco marker’s [ROS Marker](http://docs.ros.org/en/melodic/api/visualization_msgs/html/msg/Marker.html) in the [ROS MarkerArray](http://docs.ros.org/en/melodic/api/visualization_msgs/html/msg/MarkerArray.html) Message published by the [detect_aruco_markers](https://github.com/hello-robot/stretch_ros2/blob/iron/stretch_core/stretch_core/detect_aruco_markers.py) ROS node.

+`name` is used for the text string of the ArUco marker’s [ROS Marker](http://docs.ros.org/en/melodic/api/visualization_msgs/html/msg/Marker.html) in the [ROS MarkerArray](http://docs.ros.org/en/melodic/api/visualization_msgs/html/msg/MarkerArray.html) Message published by the [detect_aruco_markers](https://github.com/hello-robot/stretch_ros2/blob/humble/stretch_core/stretch_core/detect_aruco_markers.py) ROS node.

```yaml

'link': 'link_aruco_top_wrist'

```

-`link` is currently used by [stretch_calibration](https://github.com/hello-robot/stretch_ros2/blob/iron/stretch_calibration/stretch_calibration/collect_head_calibration_data.py). It is the name of the link associated with a body-mounted ArUco marker in the [robot’s URDF](https://github.com/hello-robot/stretch_ros2/blob/iron/stretch_description/urdf/stretch_aruco.xacro).

+`link` is currently used by [stretch_calibration](https://github.com/hello-robot/stretch_ros2/blob/humble/stretch_calibration/stretch_calibration/collect_head_calibration_data.py). It is the name of the link associated with a body-mounted ArUco marker in the [robot’s URDF](https://github.com/hello-robot/stretch_ros2/blob/humble/stretch_description/urdf/stretch_aruco.xacro).

-It’s good practice to add an entry to [stretch_marker_dict.yaml](https://github.com/hello-robot/stretch_ros2/blob/iron/stretch_core/config/stretch_marker_dict.yaml) for each ArUco marker you use.

+It’s good practice to add an entry to [stretch_marker_dict.yaml](https://github.com/hello-robot/stretch_ros2/blob/humble/stretch_core/config/stretch_marker_dict.yaml) for each ArUco marker you use.

### Create a New ArUco Marker

At Hello Robot, we’ve used the following guide when generating new ArUco markers.

-We generate ArUco markers using a 6x6-bit grid (36 bits) with 250 unique codes. This corresponds with[ DICT_6X6_250 defined in OpenCV](https://docs.opencv.org/3.4/d9/d6a/group__aruco.html). We generate markers using this [online ArUco marker generator](https://chev.me/arucogen/) by setting the Dictionary entry to 6x6 and then setting the Marker ID and Marker size, mm as appropriate for the specific application. We strongly recommend measuring the actual marker by hand before adding an entry for it to [stretch_marker_dict.yaml](https://github.com/hello-robot/stretch_ros2/blob/iron/stretch_core/config/stretch_marker_dict.yaml).

+We generate ArUco markers using a 6x6-bit grid (36 bits) with 250 unique codes. This corresponds with[ DICT_6X6_250 defined in OpenCV](https://docs.opencv.org/3.4/d9/d6a/group__aruco.html). We generate markers using this [online ArUco marker generator](https://chev.me/arucogen/) by setting the Dictionary entry to 6x6 and then setting the Marker ID and Marker size, mm as appropriate for the specific application. We strongly recommend measuring the actual marker by hand before adding an entry for it to [stretch_marker_dict.yaml](https://github.com/hello-robot/stretch_ros2/blob/humble/stretch_core/config/stretch_marker_dict.yaml).

We select marker ID numbers using the following ranges.

diff --git a/ros2/example_1.md b/ros2/example_1.md

index b06ff94..bf949d4 100644

--- a/ros2/example_1.md

+++ b/ros2/example_1.md

@@ -62,7 +62,7 @@ Now let's break the code down.

```python

#!/usr/bin/env python3

```

-Every Python ROS [Node](http://wiki.ros.org/Nodes) will have this declaration at the top. The first line makes sure your script is executed as a Python script.

+Every Python ROS [Node](http://docs.ros.org/en/humble/Tutorials/Beginner-CLI-Tools/Understanding-ROS2-Nodes/Understanding-ROS2-Nodes.html) will have this declaration at the top. The first line makes sure your script is executed as a Python script.

```python

diff --git a/ros2/example_2.md b/ros2/example_2.md

index 14ab672..a9001e7 100644

--- a/ros2/example_2.md

+++ b/ros2/example_2.md

@@ -5,7 +5,7 @@

This example aims to provide instructions on how to filter scan messages.

-For robots with laser scanners, ROS provides a special Message type in the [sensor_msgs](https://github.com/ros2/common_interfaces/tree/iron/sensor_msgs) package called [LaserScan](https://github.com/ros2/common_interfaces/blob/iron/sensor_msgs/msg/LaserScan.msg) to hold information about a given scan. Let's take a look at the message specification itself:

+For robots with laser scanners, ROS provides a special Message type in the [sensor_msgs](https://github.com/ros2/common_interfaces/tree/humble/sensor_msgs) package called [LaserScan](https://github.com/ros2/common_interfaces/blob/humble/sensor_msgs/msg/LaserScan.msg) to hold information about a given scan. Let's take a look at the message specification itself:

```{.bash .no-copy}

# Laser scans angles are measured counter clockwise,

@@ -109,7 +109,7 @@ Now let's break the code down.

```python

#!/usr/bin/env python3

```

-Every Python ROS [Node](http://wiki.ros.org/Nodes) will have this declaration at the top. The first line makes sure your script is executed as a Python script.

+Every Python ROS [Node](http://docs.ros.org/en/humble/Tutorials/Beginner-CLI-Tools/Understanding-ROS2-Nodes/Understanding-ROS2-Nodes.html) will have this declaration at the top. The first line makes sure your script is executed as a Python script.

```python

import rclpy

diff --git a/ros2/example_3.md b/ros2/example_3.md

index d6d92c0..588e2d2 100644

--- a/ros2/example_3.md

+++ b/ros2/example_3.md

@@ -77,7 +77,7 @@ Now let's break the code down.

#!/usr/bin/env python3

```

-Every Python ROS [Node](http://wiki.ros.org/Nodes) will have this declaration at the top. The first line makes sure your script is executed as a Python script.

+Every Python ROS [Node](http://docs.ros.org/en/humble/Tutorials/Beginner-CLI-Tools/Understanding-ROS2-Nodes/Understanding-ROS2-Nodes.html) will have this declaration at the top. The first line makes sure your script is executed as a Python script.

```python

diff --git a/ros2/example_4.md b/ros2/example_4.md

index 8e8db34..c2143da 100644

--- a/ros2/example_4.md

+++ b/ros2/example_4.md

@@ -71,7 +71,7 @@ Now let's break the code down.

#!/usr/bin/env python3

```

-Every Python ROS [Node](http://wiki.ros.org/Nodes) will have this declaration at the top. The first line makes sure your script is executed as a Python script.

+Every Python ROS [Node](http://docs.ros.org/en/humble/Tutorials/Beginner-CLI-Tools/Understanding-ROS2-Nodes/Understanding-ROS2-Nodes.html) will have this declaration at the top. The first line makes sure your script is executed as a Python script.

```python

import rclpy

diff --git a/ros2/example_5.md b/ros2/example_5.md

index ad45adf..43aed76 100644

--- a/ros2/example_5.md

+++ b/ros2/example_5.md

@@ -1,7 +1,7 @@

## Example 5

In this example, we will review a Python script that prints out the positions of a selected group of Stretch joints. This script is helpful if you need the joint positions after you teleoperated Stretch with the Xbox controller or physically moved the robot to the desired configuration after hitting the run stop button.

-If you are looking for a continuous print of the joint states while Stretch is in action, then you can use the [ros2 topic command-line tool](https://docs.ros.org/en/iron/Tutorials/Beginner-CLI-Tools/Understanding-ROS2-Topics/Understanding-ROS2-Topics.html) shown in the [Internal State of Stretch Tutorial](https://github.com/hello-robot/stretch_tutorials/blob/master/ros2/internal_state_of_stretch.md).

+If you are looking for a continuous print of the joint states while Stretch is in action, then you can use the [ros2 topic command-line tool](https://docs.ros.org/en/humble/Tutorials/Beginner-CLI-Tools/Understanding-ROS2-Topics/Understanding-ROS2-Topics.html) shown in the [Internal State of Stretch Tutorial](https://github.com/hello-robot/stretch_tutorials/blob/master/ros2/internal_state_of_stretch.md).

Begin by starting up the stretch driver launch file.

@@ -9,7 +9,7 @@ Begin by starting up the stretch driver launch file.

ros2 launch stretch_core stretch_driver.launch.py

```

-You can then hit the run-stop button (you should hear a beep and the LED light in the button blink) and move the robot's joints to a desired configuration. Once you are satisfied with the configuration, hold the run-stop button until you hear a beep. Then run the following command to execute the [joint_state_printer.py](https://github.com/hello-robot/stretch_tutorials/blob/iron/stretch_ros_tutorials/joint_state_printer.py) which will print the joint positions of the lift, arm, and wrist. In a new terminal, execute:

+You can then hit the run-stop button (you should hear a beep and the LED light in the button blink) and move the robot's joints to a desired configuration. Once you are satisfied with the configuration, hold the run-stop button until you hear a beep. Then run the following command to execute the [joint_state_printer.py](https://github.com/hello-robot/stretch_tutorials/blob/humble/stretch_ros_tutorials/joint_state_printer.py) which will print the joint positions of the lift, arm, and wrist. In a new terminal, execute:

```{.bash .shell-prompt}

cd ament_ws/src/stretch_tutorials/stretch_ros_tutorials/

@@ -130,7 +130,7 @@ Now let's break the code down.

#!/usr/bin/env python3

```

-Every Python ROS [Node](https://docs.ros.org/en/iron/Tutorials/Beginner-CLI-Tools/Understanding-ROS2-Nodes/Understanding-ROS2-Nodes.html) will have this declaration at the top. The first line makes sure your script is executed as a Python3 script.

+Every Python ROS [Node](http://docs.ros.org/en/humble/Tutorials/Beginner-CLI-Tools/Understanding-ROS2-Nodes/Understanding-ROS2-Nodes.html) will have this declaration at the top. The first line makes sure your script is executed as a Python3 script.

```python

import rclpy

diff --git a/ros2/example_6.md b/ros2/example_6.md

index c14a14e..c1f93ae 100644

--- a/ros2/example_6.md

+++ b/ros2/example_6.md

@@ -1,6 +1,6 @@

## Example 6

-In this example, we will review a Python script that prints and stores the effort values from a specified joint. If you are looking for a continuous print of the joint state efforts while Stretch is in action, then you can use the [ros2 topic command-line tool](https://docs.ros.org/en/iron/Tutorials/Beginner-CLI-Tools/Understanding-ROS2-Topics/Understanding-ROS2-Topics.html) shown in the [Internal State of Stretch Tutorial](https://github.com/hello-robot/stretch_tutorials/blob/master/ros2/internal_state_of_stretch.md).

+In this example, we will review a Python script that prints and stores the effort values from a specified joint. If you are looking for a continuous print of the joint state efforts while Stretch is in action, then you can use the [ros2 topic command-line tool](https://docs.ros.org/en/humble/Tutorials/Beginner-CLI-Tools/Understanding-ROS2-Topics/Understanding-ROS2-Topics.html) shown in the [Internal State of Stretch Tutorial](https://github.com/hello-robot/stretch_tutorials/blob/master/ros2/internal_state_of_stretch.md).

@@ -12,7 +12,7 @@ Begin by running the following command in a terminal.

ros2 launch stretch_core stretch_driver.launch.py

```

-There's no need to switch to the position mode in comparison with ROS1 because the default mode of the launcher is this position mode. Then run the [effort_sensing.py](https://github.com/hello-robot/stretch_tutorials/blob/iron/stretch_ros_tutorials/effort_sensing.py) node. In a new terminal, execute:

+There's no need to switch to the position mode in comparison with ROS1 because the default mode of the launcher is this position mode. Then run the [effort_sensing.py](https://github.com/hello-robot/stretch_tutorials/blob/humble/stretch_ros_tutorials/effort_sensing.py) node. In a new terminal, execute:

```{.bash .shell-prompt}

cd ament_ws/src/stretch_tutorials/stretch_ros_tutorials/

@@ -175,13 +175,14 @@ if __name__ == '__main__':

```

### The Code Explained

-This code is similar to that of the [multipoint_command](https://github.com/hello-robot/stretch_tutorials/blob/iron/stretch_ros_tutorials/multipoint_command.py) and [joint_state_printer](https://github.com/hello-robot/stretch_tutorials/blob/iron/stretch_ros_tutorials/joint_state_printer.py) node. Therefore, this example will highlight sections that are different from those tutorials. Now let's break the code down.

+This code is similar to that of the [multipoint_command](https://github.com/hello-robot/stretch_tutorials/blob/humble/stretch_ros_tutorials/multipoint_command.py) and [joint_state_printer](https://github.com/hello-robot/stretch_tutorials/blob/humble/stretch_ros_tutorials/joint_state_printer.py) node. Therefore, this example will highlight sections that are different from those tutorials. Now let's break the code down.

```python

#!/usr/bin/env python3

```

-Every Python ROS [Node](https://docs.ros.org/en/iron/Tutorials/Beginner-CLI-Tools/Understanding-ROS2-Nodes/Understanding-ROS2-Nodes.html) will have this declaration at the top. The first line makes sure your script is executed as a Python3 script.

+Every Python ROS [Node](http://docs.ros.org/en/humble/Tutorials/Beginner-CLI-Tools/Understanding-ROS2-Nodes/Understanding-ROS2-Nodes.html

+) will have this declaration at the top. The first line makes sure your script is executed as a Python3 script.

```python

import rclpy

@@ -199,7 +200,7 @@ from control_msgs.action import FollowJointTrajectory

from trajectory_msgs.msg import JointTrajectoryPoint

```

-You need to import rclpy if you are writing a ROS [Node](https://docs.ros.org/en/iron/Tutorials/Beginner-CLI-Tools/Understanding-ROS2-Nodes/Understanding-ROS2-Nodes.html). Import the `FollowJointTrajectory` from the `control_msgs.action` package to control the Stretch robot. Import `JointTrajectoryPoint` from the `trajectory_msgs` package to define robot trajectories. The `hello_helpers` package consists of a module that provides various Python scripts used across [stretch_ros](https://github.com/hello-robot/stretch_ros2). In this instance, we are importing the `hello_misc` script.

+You need to import rclpy if you are writing a ROS [Node](http://docs.ros.org/en/humble/Tutorials/Beginner-CLI-Tools/Understanding-ROS2-Nodes/Understanding-ROS2-Nodes.html). Import the `FollowJointTrajectory` from the `control_msgs.action` package to control the Stretch robot. Import `JointTrajectoryPoint` from the `trajectory_msgs` package to define robot trajectories. The `hello_helpers` package consists of a module that provides various Python scripts used across [stretch_ros](https://github.com/hello-robot/stretch_ros2). In this instance, we are importing the `hello_misc` script.

```Python

class JointActuatorEffortSensor(hm.HelloNode):

diff --git a/ros2/example_7.md b/ros2/example_7.md

index 66245f5..1d0db78 100644

--- a/ros2/example_7.md

+++ b/ros2/example_7.md

@@ -28,7 +28,7 @@ ros2 run rviz2 rviz2 -d /home/hello-robot/ament_ws/src/stretch_tutorials/rviz/pe

```

## Capture Image with Python Script

-In this section, we will use a Python node to capture an image from the [RealSense camera](https://www.intelrealsense.com/depth-camera-d435i/). Execute the [capture_image.py](stretch_ros_tutorials/capture_image.py) node to save a .jpeg image of the image topic `/camera/color/image_raw`. In a terminal, execute:

+In this section, we will use a Python node to capture an image from the [RealSense camera](https://www.intelrealsense.com/depth-camera-d435i/). Execute the [capture_image.py](https://github.com/hello-robot/stretch_tutorials/blob/humble/stretch_ros_tutorials/capture_image.py) node to save a .jpeg image of the image topic `/camera/color/image_raw`. In a terminal, execute:

```{.bash .shell-prompt}

cd ~/ament_ws/src/stretch_tutorials/stretch_ros_tutorials

@@ -99,7 +99,7 @@ Now let's break the code down.

```python

#!/usr/bin/env python3

```

-Every Python ROS [Node](https://docs.ros.org/en/iron/Tutorials/Beginner-CLI-Tools/Understanding-ROS2-Nodes/Understanding-ROS2-Nodes.html) will have this declaration at the top. The first line makes sure your script is executed as a Python3 script.

+Every Python ROS [Node](http://docs.ros.org/en/humble/Tutorials/Beginner-CLI-Tools/Understanding-ROS2-Nodes/Understanding-ROS2-Nodes.html) will have this declaration at the top. The first line makes sure your script is executed as a Python3 script.

```python

import rclpy

@@ -108,7 +108,7 @@ import os

import cv2

```

-You need to import `rclpy` if you are writing a ROS [Node](https://docs.ros.org/en/iron/Tutorials/Beginner-CLI-Tools/Understanding-ROS2-Nodes/Understanding-ROS2-Nodes.html). There are functions from `sys`, `os`, and `cv2` that are required within this code. `cv2` is a library of Python functions that implements computer vision algorithms. Further information about cv2 can be found here: [OpenCV Python](https://www.geeksforgeeks.org/opencv-python-tutorial/).

+You need to import `rclpy` if you are writing a ROS [Node](http://docs.ros.org/en/humble/Tutorials/Beginner-CLI-Tools/Understanding-ROS2-Nodes/Understanding-ROS2-Nodes.html). There are functions from `sys`, `os`, and `cv2` that are required within this code. `cv2` is a library of Python functions that implements computer vision algorithms. Further information about cv2 can be found here: [OpenCV Python](https://www.geeksforgeeks.org/opencv-python-tutorial/).

```python

from rclpy.node import Node

From e3f6d0171c99f13e8b1613f0802f93bb1c5a0e00 Mon Sep 17 00:00:00 2001

From: hello-jesus

Date: Mon, 18 Sep 2023 13:01:45 -0700

Subject: [PATCH 08/15] Update links to Humble documentation

---

ros2/align_to_aruco.md | 6 +++---

ros2/example_10.md | 2 +-

ros2/example_12.md | 14 +++++++-------

ros2/follow_joint_trajectory.md | 4 ++--

ros2/navigation_simple_commander.md | 8 ++++----

ros2/obstacle_avoider.md | 4 ++--

6 files changed, 19 insertions(+), 19 deletions(-)

diff --git a/ros2/align_to_aruco.md b/ros2/align_to_aruco.md

index ec6a0b5..65e60ab 100644

--- a/ros2/align_to_aruco.md

+++ b/ros2/align_to_aruco.md

@@ -4,7 +4,7 @@ ArUco markers are a type of fiducials that are used extensively in robotics for

## ArUco Detection

Stretch uses the OpenCV ArUco detection library and is configured to identify a specific set of ArUco markers belonging to the 6x6, 250 dictionary. To understand why this is important, please refer to [this](https://docs.opencv.org/4.x/d5/dae/tutorial_aruco_detection.html) handy guide provided by OpenCV.

-Stretch comes preconfigured to identify ArUco markers. The ROS node that enables this is the detect_aruco_markers [node](https://github.com/hello-robot/stretch_ros2/blob/iron/stretch_core/stretch_core/detect_aruco_markers.py) in the stretch_core package. Thanks to this node, identifying and estimating the pose of a marker is as easy as pointing the camera at the marker and running the detection node. It is also possible and quite convenient to visualize the detections with RViz.

+Stretch comes preconfigured to identify ArUco markers. The ROS node that enables this is the detect_aruco_markers [node](https://github.com/hello-robot/stretch_ros2/blob/humble/stretch_core/stretch_core/detect_aruco_markers.py) in the stretch_core package. Thanks to this node, identifying and estimating the pose of a marker is as easy as pointing the camera at the marker and running the detection node. It is also possible and quite convenient to visualize the detections with RViz.

## Computing Transformations

If you have not already done so, now might be a good time to review the [tf listener](https://docs.hello-robot.com/0.2/stretch-tutorials/ros2/example_10/) tutorial. Go on, we can wait…

@@ -50,7 +50,7 @@ ros2 launch stretch_core align_to_aruco.launch.py

## Code Breakdown

-Let's jump into the code to see how things work under the hood. Follow along [here](https://github.com/hello-robot/stretch_ros2/blob/iron/stretch_core/stretch_core/align_to_aruco.py) to have a look at the entire script.

+Let's jump into the code to see how things work under the hood. Follow along [here](https://github.com/hello-robot/stretch_ros2/blob/humble/stretch_core/stretch_core/align_to_aruco.py) to have a look at the entire script.

We make use of two separate Python classes for this demo. The FrameListener class is derived from the Node class and is the place where we compute the TF transformations. For an explanation of this class, you can refer to the [TF listener](https://docs.hello-robot.com/0.2/stretch-tutorials/ros2/example_10/) tutorial.

```python

@@ -118,4 +118,4 @@ The align_to_marker() method is where we command Stretch to the pose goal in thr

def align_to_marker(self):

```

-If you want to work with a different ArUco marker than the one we used in this tutorial, you can do so by changing line 44 in the [code](https://github.com/hello-robot/stretch_ros2/blob/iron/stretch_core/stretch_core/align_to_aruco.py#L44) to the one you wish to detect. Also, don't forget to add the marker in the [stretch_marker_dict.yaml](https://github.com/hello-robot/stretch_ros2/blob/iron/stretch_core/config/stretch_marker_dict.yaml) ArUco marker dictionary.

+If you want to work with a different ArUco marker than the one we used in this tutorial, you can do so by changing line 44 in the [code](https://github.com/hello-robot/stretch_ros2/blob/humble/stretch_core/stretch_core/align_to_aruco.py#L44) to the one you wish to detect. Also, don't forget to add the marker in the [stretch_marker_dict.yaml](https://github.com/hello-robot/stretch_ros2/blob/humble/stretch_core/config/stretch_marker_dict.yaml) ArUco marker dictionary.

diff --git a/ros2/example_10.md b/ros2/example_10.md

index 7a2a5de..b556cf8 100644

--- a/ros2/example_10.md

+++ b/ros2/example_10.md

@@ -137,7 +137,7 @@ Now let's break the code down.

#!/usr/bin/env python3

```

-Every Python ROS [Node](http://wiki.ros.org/Nodes) will have this declaration at the top. The first line makes sure your script is executed as a Python script.

+Every Python ROS [Node](http://docs.ros.org/en/humble/Tutorials/Beginner-CLI-Tools/Understanding-ROS2-Nodes/Understanding-ROS2-Nodes.html) will have this declaration at the top. The first line makes sure your script is executed as a Python script.

```python

import rclpy

diff --git a/ros2/example_12.md b/ros2/example_12.md

index 3f7ec1b..0da73b1 100644

--- a/ros2/example_12.md

+++ b/ros2/example_12.md

@@ -2,9 +2,9 @@

For this example, we will send follow joint trajectory commands for the head camera to search and locate an ArUco tag. In this instance, a Stretch robot will try to locate the docking station's ArUco tag.

## Modifying Stretch Marker Dictionary YAML File

-When defining the ArUco markers on Stretch, hello robot utilizes a YAML file, [stretch_marker_dict.yaml](https://github.com/hello-robot/stretch_ros2/blob/iron/stretch_core/config/stretch_marker_dict.yaml), that holds the information about the markers. A further breakdown of the YAML file can be found in our [Aruco Marker Detection](aruco_marker_detection.md) tutorial.

+When defining the ArUco markers on Stretch, hello robot utilizes a YAML file, [stretch_marker_dict.yaml](https://github.com/hello-robot/stretch_ros2/blob/humble/stretch_core/config/stretch_marker_dict.yaml), that holds the information about the markers. A further breakdown of the YAML file can be found in our [Aruco Marker Detection](aruco_marker_detection.md) tutorial.

-Below is what needs to be included in the [stretch_marker_dict.yaml](https://github.com/hello-robot/stretch_ros2/blob/iron/stretch_core/config/stretch_marker_dict.yaml) file so the [detect_aruco_markers](https://github.com/hello-robot/stretch_ros2/blob/iron/stretch_core/stretch_core/detect_aruco_markers.py) node can find the docking station's ArUco tag.

+Below is what needs to be included in the [stretch_marker_dict.yaml](https://github.com/hello-robot/stretch_ros2/blob/humble/stretch_core/config/stretch_marker_dict.yaml) file so the [detect_aruco_markers](https://github.com/hello-robot/stretch_ros2/blob/humble/stretch_core/stretch_core/detect_aruco_markers.py) node can find the docking station's ArUco tag.

```yaml

'245':

@@ -27,19 +27,19 @@ To activate the RealSense camera and publish topics to be visualized, run the fo

ros2 launch stretch_core d435i_high_resolution.launch.py

```

-Next, run the stretch ArUco launch file which will bring up the [detect_aruco_markers](https://github.com/hello-robot/stretch_ros2/blob/iron/stretch_core/stretch_core/detect_aruco_markers.py) node. In a new terminal, execute:

+Next, run the stretch ArUco launch file which will bring up the [detect_aruco_markers](https://github.com/hello-robot/stretch_ros2/blob/humble/stretch_core/stretch_core/detect_aruco_markers.py) node. In a new terminal, execute:

```{.bash .shell-prompt}

ros2 launch stretch_core stretch_aruco.launch.py

```

-Within this tutorial package, there is an [RViz config file](https://github.com/hello-robot/stretch_tutorials/blob/iron/rviz/aruco_detector_example.rviz) with the topics for the transform frames in the Display tree. You can visualize these topics and the robot model by running the command below in a new terminal.

+Within this tutorial package, there is an [RViz config file](https://github.com/hello-robot/stretch_tutorials/blob/humble/rviz/aruco_detector_example.rviz) with the topics for the transform frames in the Display tree. You can visualize these topics and the robot model by running the command below in a new terminal.

```{.bash .shell-prompt}

ros2 run rviz2 rviz2 -d /home/hello-robot/ament_ws/src/stretch_tutorials/rviz/aruco_detector_example.rviz

```

-Then run the [aruco_tag_locator.py](https://github.com/hello-robot/stretch_tutorials/blob/iron/stretch_ros_tutorials/aruco_tag_locator.py) node. In a new terminal, execute:

+Then run the [aruco_tag_locator.py](https://github.com/hello-robot/stretch_tutorials/blob/humble/stretch_ros_tutorials/aruco_tag_locator.py) node. In a new terminal, execute:

```{.bash .shell-prompt}

cd ament_ws/src/stretch_tutorials/stretch_ros_tutorials/

@@ -274,7 +274,7 @@ Now let's break the code down.

#!/usr/bin/env python3

```

-Every Python ROS [Node](https://docs.ros.org/en/iron/Tutorials/Beginner-CLI-Tools/Understanding-ROS2-Nodes/Understanding-ROS2-Nodes.html) will have this declaration at the top. The first line makes sure your script is executed as a Python3 script.

+Every Python ROS [Node](http://docs.ros.org/en/humble/Tutorials/Beginner-CLI-Tools/Understanding-ROS2-Nodes/Understanding-ROS2-Nodes.html) will have this declaration at the top. The first line makes sure your script is executed as a Python3 script.

```python

import rclpy

@@ -291,7 +291,7 @@ from trajectory_msgs.msg import JointTrajectoryPoint

from geometry_msgs.msg import TransformStamped

```

-You need to import `rclpy` if you are writing a ROS [Node](https://docs.ros.org/en/iron/Tutorials/Beginner-CLI-Tools/Understanding-ROS2-Nodes/Understanding-ROS2-Nodes.html). Import other python modules needed for this node. Import the `FollowJointTrajectory` from the [control_msgs.action](http://wiki.ros.org/control_msgs) package to control the Stretch robot. Import `JointTrajectoryPoint` from the [trajectory_msgs](https://github.com/ros2/common_interfaces/tree/iron/trajectory_msgs) package to define robot trajectories. The [hello_helpers](https://github.com/hello-robot/stretch_ros2/tree/iron/hello_helpers) package consists of a module that provides various Python scripts used across [stretch_ros](https://github.com/hello-robot/stretch_ros2). In this instance, we are importing the `hello_misc` script.

+You need to import `rclpy` if you are writing a ROS [Node](http://docs.ros.org/en/humble/Tutorials/Beginner-CLI-Tools/Understanding-ROS2-Nodes/Understanding-ROS2-Nodes.html). Import other python modules needed for this node. Import the `FollowJointTrajectory` from the [control_msgs.action](http://wiki.ros.org/control_msgs) package to control the Stretch robot. Import `JointTrajectoryPoint` from the [trajectory_msgs](https://github.com/ros2/common_interfaces/tree/humble/trajectory_msgs) package to define robot trajectories. The [hello_helpers](https://github.com/hello-robot/stretch_ros2/tree/humble/hello_helpers) package consists of a module that provides various Python scripts used across [stretch_ros](https://github.com/hello-robot/stretch_ros2). In this instance, we are importing the `hello_misc` script.

```python

def __init__(self):

diff --git a/ros2/follow_joint_trajectory.md b/ros2/follow_joint_trajectory.md

index b82a538..145b040 100644

--- a/ros2/follow_joint_trajectory.md

+++ b/ros2/follow_joint_trajectory.md

@@ -87,7 +87,7 @@ Now let's break the code down.

```python

#!/usr/bin/env python3

```

-Every Python ROS [Node](http://wiki.ros.org/Nodes) will have this declaration at the top. The first line makes sure your script is executed as a Python script.

+Every Python ROS [Node](https://docs.ros.org/en/humble/Tutorials/Beginner-CLI-Tools/Understanding-ROS2-Nodes/Understanding-ROS2-Nodes.html) will have this declaration at the top. The first line makes sure your script is executed as a Python script.

```python

@@ -99,7 +99,7 @@ from hello_helpers.hello_misc import HelloNode

import time

```

-You need to import rclpy if you are writing a ROS 2 Node. Import the FollowJointTrajectory from the [control_msgs.action](https://github.com/ros-controls/control_msgs/tree/master/control_msgs) package to control the Stretch robot. Import JointTrajectoryPoint from the [trajectory_msgs](https://github.com/ros2/common_interfaces/tree/rolling/trajectory_msgs/msg) package to define robot trajectories.

+You need to import rclpy if you are writing a ROS 2 Node. Import the FollowJointTrajectory from the [control_msgs.action](https://github.com/ros-controls/control_msgs/tree/master/control_msgs) package to control the Stretch robot. Import JointTrajectoryPoint from the [trajectory_msgs](https://github.com/ros2/common_interfaces/tree/humble/trajectory_msgs/msg) package to define robot trajectories.

```python

class StowCommand(HelloNode):

diff --git a/ros2/navigation_simple_commander.md b/ros2/navigation_simple_commander.md

index 015ae22..f8f3933 100644

--- a/ros2/navigation_simple_commander.md

+++ b/ros2/navigation_simple_commander.md

@@ -2,7 +2,7 @@

In this tutorial, we will work with Stretch to explore the Simple Commander Python API to enable autonomous navigation programmatically. We will also demonstrate a security patrol routine for Stretch developed using this API. If you just landed here, it might be a good idea to first review the previous tutorial which covered mapping and navigation using RViz as an interface.

## The Simple Commander Python API

-To develop complex behaviors with Stretch where navigation is just one aspect of the autonomy stack, we need to be able to plan and execute navigation routines as part of a bigger program. Luckily, the Nav2 stack exposes a Python API that abstracts the ROS layer and the Behavior Tree framework (more on that later!) from the user through a pre-configured library called the [robot navigator](https://github.com/hello-robot/stretch_ros2/blob/iron/stretch_nav2/stretch_nav2/robot_navigator.py). This library defines a class called BasicNavigator which wraps the planner, controller and recovery action servers and exposes methods such as `goToPose()`, `goToPoses()` and `followWaypoints()` to execute navigation behaviors.

+To develop complex behaviors with Stretch where navigation is just one aspect of the autonomy stack, we need to be able to plan and execute navigation routines as part of a bigger program. Luckily, the Nav2 stack exposes a Python API that abstracts the ROS layer and the Behavior Tree framework (more on that later!) from the user through a pre-configured library called the [robot navigator](https://github.com/hello-robot/stretch_ros2/blob/humble/stretch_nav2/stretch_nav2/robot_navigator.py). This library defines a class called BasicNavigator which wraps the planner, controller and recovery action servers and exposes methods such as `goToPose()`, `goToPoses()` and `followWaypoints()` to execute navigation behaviors.

Let's first see the demo in action and then explore the code to understand how this works!

@@ -16,7 +16,7 @@ stretch_robot_stow.py

```

## Setup

-Let's set the patrol route up before you can execute this demo in your map. This requires reading the position of the robot at various locations in the map and entering the co-ordinates in the array called `security_route` in the [simple_commander_demo.py](https://github.com/hello-robot/stretch_ros2/blob/iron/stretch_nav2/stretch_nav2/simple_commander_demo.py#L30) file.

+Let's set the patrol route up before you can execute this demo in your map. This requires reading the position of the robot at various locations in the map and entering the co-ordinates in the array called `security_route` in the [simple_commander_demo.py](https://github.com/hello-robot/stretch_ros2/blob/humble/stretch_nav2/stretch_nav2/simple_commander_demo.py#L30) file.

First, execute the following command while passing the correct map YAML. Then, press the 'Startup' button:

@@ -24,7 +24,7 @@ First, execute the following command while passing the correct map YAML. Then, p

ros2 launch stretch_nav2 navigation.launch.py map:=${HELLO_ROBOT_FLEET}/maps/.yaml

```

-Since we expect the first point in the patrol route to be at the origin of the map, the first coordinates should be (0.0, 0.0). Next, to define the route, the easiest way to define the waypoints in the `security_route` array is by setting the robot at random locations in the map using the '2D Pose Estimate' button in RViz as shown below. For each location, note the x, and y coordinates in the position field of the base_footprint frame and add it to the `security_route` array in [simple_commander_demo.py](https://github.com/hello-robot/stretch_ros2/blob/iron/stretch_nav2/stretch_nav2/simple_commander_demo.py#L30).

+Since we expect the first point in the patrol route to be at the origin of the map, the first coordinates should be (0.0, 0.0). Next, to define the route, the easiest way to define the waypoints in the `security_route` array is by setting the robot at random locations in the map using the '2D Pose Estimate' button in RViz as shown below. For each location, note the x, and y coordinates in the position field of the base_footprint frame and add it to the `security_route` array in [simple_commander_demo.py](https://github.com/hello-robot/stretch_ros2/blob/humble/stretch_nav2/stretch_nav2/simple_commander_demo.py#L30).

@@ -50,7 +50,7 @@ ros2 launch stretch_nav2 demo_security.launch.py map:=${HELLO_ROBOT_FLEET}/maps/

## Code Breakdown

-Now, let's jump into the code to see how things work under the hood. Follow along in the [code](https://github.com/hello-robot/stretch_ros2/blob/iron/stretch_nav2/stretch_nav2/simple_commander_demo.py) to have a look at the entire script.

+Now, let's jump into the code to see how things work under the hood. Follow along in the [code](https://github.com/hello-robot/stretch_ros2/blob/humble/stretch_nav2/stretch_nav2/simple_commander_demo.py) to have a look at the entire script.

First, we import the `BasicNavigator` class from the robot_navigator library which comes standard with the Nav2 stack. This class wraps around the planner, controller and recovery action servers.

diff --git a/ros2/obstacle_avoider.md b/ros2/obstacle_avoider.md

index b514bff..8905c3a 100644

--- a/ros2/obstacle_avoider.md

+++ b/ros2/obstacle_avoider.md

@@ -12,7 +12,7 @@ LaserScanSpeckleFilter - We use this filter to remove phantom detections in the

LaserScanBoxFilter - Stretch is prone to returning false detections right over the mobile base. While navigating, since it’s safe to assume that Stretch is not standing right above an obstacle, we filter out any detections that are in a box shape over the mobile base.

-Beware that filtering laser scans comes at the cost of a sparser scan that might not be ideal for all applications. If you want to tweak the values for your end application, you could do so by changing the values in the [laser_filter_params.yaml](https://github.com/hello-robot/stretch_ros2/blob/iron/stretch_core/config/laser_filter_params.yaml) file and by following the laser_filters package wiki. Also, if you are feeling zany and want to use the raw unfiltered scans from the laser scanner, simply subscribe to the /scan topic instead of the /scan_filtered topic.

+Beware that filtering laser scans comes at the cost of a sparser scan that might not be ideal for all applications. If you want to tweak the values for your end application, you could do so by changing the values in the [laser_filter_params.yaml](https://github.com/hello-robot/stretch_ros2/blob/humble/stretch_core/config/laser_filter_params.yaml) file and by following the laser_filters package wiki. Also, if you are feeling zany and want to use the raw unfiltered scans from the laser scanner, simply subscribe to the /scan topic instead of the /scan_filtered topic.

@@ -42,7 +42,7 @@ ros2 launch stretch_core rplidar_keepout.launch.py

## Code Breakdown:

-Let's jump into the code to see how things work under the hood. Follow along [here](https://github.com/hello-robot/stretch_ros2/blob/iron/stretch_core/stretch_core/avoider.py) to have a look at the entire script.

+Let's jump into the code to see how things work under the hood. Follow along [here](https://github.com/hello-robot/stretch_ros2/blob/humble/stretch_core/stretch_core/avoider.py) to have a look at the entire script.

The turning distance is defined by the distance attribute and the keepout distance is defined by the keepout attribute.

From 736138725d0614955db9453a5c5cd5a3afe53b8d Mon Sep 17 00:00:00 2001

From: hello-jesus

Date: Fri, 22 Sep 2023 13:30:02 -0700

Subject: [PATCH 09/15] Fix error, driver needs to be in navigation mode

---

ros2/example_3.md | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/ros2/example_3.md b/ros2/example_3.md

index 588e2d2..091971a 100644

--- a/ros2/example_3.md

+++ b/ros2/example_3.md

@@ -6,7 +6,7 @@

The aim of example 3 is to combine the two previous examples and have Stretch utilize its laser scan data to avoid collision with objects as it drives forward.

```{.bash .shell-prompt}

-ros2 launch stretch_core stretch_driver.launch.py

+ros2 launch stretch_core stretch_driver.launch.py mode:=navigation

```

Then in a new terminal type the following to activate the LiDAR sensor.

From b0e5d384dd576edaef1c9810207da4381548fdfb Mon Sep 17 00:00:00 2001

From: hello-jesus

Date: Fri, 22 Sep 2023 13:30:47 -0700

Subject: [PATCH 10/15] Added ReSpeaker tutorials

---

ros2/example_8.md | 150 ++++++++++++

ros2/example_9.md | 470 ++++++++++++++++++++++++++++++++++++

ros2/respeaker_mic_array.md | 61 +++++

ros2/respeaker_topics.md | 169 +++++++++++++

4 files changed, 850 insertions(+)

create mode 100644 ros2/example_8.md

create mode 100644 ros2/example_9.md

create mode 100644 ros2/respeaker_mic_array.md

create mode 100644 ros2/respeaker_topics.md

diff --git a/ros2/example_8.md b/ros2/example_8.md

new file mode 100644

index 0000000..7ab62b3

--- /dev/null

+++ b/ros2/example_8.md

@@ -0,0 +1,150 @@

+# Example 8

+

+This example will showcase how to save the interpreted speech from Stretch's [ReSpeaker Mic Array v2.0](https://wiki.seeedstudio.com/ReSpeaker_Mic_Array_v2.0/) to a text file.

+

+

+

+

+Begin by running the `respeaker.launch.py` file in a terminal.

+

+```{.bash .shell-prompt}

+ros2 launch respeaker_ros2 respeaker.launch.py

+```

+Then run the [speech_text.py](https://github.com/hello-robot/stretch_tutorials/blob/humble/stretch_ros_tutorials/speech_text.py) node. In a new terminal, execute:

+

+```{.bash .shell-prompt}

+cd ament_ws/src/stretch_tutorials/stretch_ros_tutorials/

+python3 speech_text.py

+```

+

+The ReSpeaker will now listen, interpret speech, and save the transcript to a text file. To shut down the node, press `Ctrl` + `c` in the terminal.

+

+### The Code

+

+```python

+#!/usr/bin/env python3

+

+# Import modules

+import rclpy

+import os

+from rclpy.node import Node

+

+# Import SpeechRecognitionCandidates from the speech_recognition_msgs package

+from speech_recognition_msgs.msg import SpeechRecognitionCandidates

+

+class SpeechText(Node):

+    def __init__(self):

+        super().__init__('stretch_speech_text')

+        # Initialize subscriber

+        self.sub = self.create_subscription(SpeechRecognitionCandidates, "speech_to_text", self.callback, 1)

+

+        # Create path to save the transcript to the stored data folder

+        self.save_path = '/home/hello-robot/ament_ws/src/stretch_tutorials/stored_data'

+

+        # Create log message

+        self.get_logger().info("Listening to speech")

+

+    def callback(self,msg):

+        # Take all items in the iterable list and join them into a single string

+        transcript = ' '.join(map(str,msg.transcript))

+

+        # Define the file name and create a complete path name

+        file_name = 'speech.txt'

+        completeName = os.path.join(self.save_path, file_name)

+

+        # Append the transcript to the end of the file

+        with open(completeName, "a+") as file_object:

+            file_object.write("\n")

+            file_object.write(transcript)

+

+def main(args=None):

+    # Initialize the rclpy library

+    rclpy.init(args=args)

+

+    # Instantiate the SpeechText class, which creates the node named stretch_speech_text

+    speech_txt = SpeechText()

+

+    # Give control to ROS. This will allow the callback to be called whenever new

+    # messages come in. If we don't put this line in, then the node will not work,

+    # and ROS will not process any messages

+    rclpy.spin(speech_txt)

+

+if __name__ == '__main__':

+    main()

+```

+

+### The Code Explained

+Now let's break the code down.

+

+```python

+#!/usr/bin/env python3

+```

+

+Every Python ROS [Node](https://docs.ros.org/en/humble/Tutorials/Beginner-CLI-Tools/Understanding-ROS2-Nodes/Understanding-ROS2-Nodes.html) will have this declaration at the top. The first line makes sure your script is executed as a Python3 script.

+

+```python

+import rclpy

+import os

+from rclpy.node import Node

+```

+

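+You need to import `rclpy` if you are writing a ROS 2 node in Python. The `os` module is used to build the path of the output file, and `Node` is the base class that our node inherits from.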

+

+```python

+from speech_recognition_msgs.msg import SpeechRecognitionCandidates

+```

+

+Import `SpeechRecognitionCandidates` from `speech_recognition_msgs.msg` so that we can receive the interpreted speech.

+

+```python

+def __init__(self):

+    super().__init__('stretch_speech_text')

+    self.sub = self.create_subscription(SpeechRecognitionCandidates, "speech_to_text", self.callback, 1)

+```

+

+Set up a subscriber. We're going to subscribe to the topic `speech_to_text`, looking for `SpeechRecognitionCandidates` messages. When a message comes in, ROS is going to pass it to the `callback` method automatically. The final argument, `1`, is the queue depth, so only the most recent unprocessed message is kept.

+

+```python

+self.save_path = '/home/hello-robot/ament_ws/src/stretch_tutorials/stored_data'

+```

+

+Define the directory to save the text file.

+

+```python

+transcript = ' '.join(map(str,msg.transcript))

+```

+

+Take all items in the iterable list and join them into a single string named transcript.
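The join step can be tried without a robot or a running ROS graph. The sketch below is only an illustration: `join_transcript` and `fake_msg` are hypothetical names, and a `SimpleNamespace` stands in for a real `SpeechRecognitionCandidates` message, assuming only that the message exposes a `transcript` list of strings as the tutorial code does.

```python
from types import SimpleNamespace


def join_transcript(msg):
    """Join every entry of msg.transcript into one space-separated string."""
    return ' '.join(map(str, msg.transcript))


# Hypothetical stand-in for a SpeechRecognitionCandidates message
fake_msg = SimpleNamespace(transcript=['hello stretch', 'how are you'])

joined = join_transcript(fake_msg)
empty = join_transcript(SimpleNamespace(transcript=[]))

print(joined)
```

An empty `transcript` list yields an empty string, so the callback would still write a blank line to the file for such a message.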

+

+```python

+file_name = 'speech.txt'

+completeName = os.path.join(self.save_path, file_name)

+```

+

+Define the file name and join it with the save directory to create the complete file path.

+

+```python

+with open(completeName, "a+") as file_object:

+    file_object.write("\n")

+    file_object.write(transcript)

+```

+

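+Open the file in append mode (`"a+"`), which creates it if it does not exist yet, then write a newline followed by the transcript so that each message lands on its own line.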
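Opening a file in `"a+"` mode creates the file but not its parent directory, so the write fails if the save directory is missing. The sketch below shows one way to guard against that; `append_transcript` is a hypothetical helper, the `os.makedirs` call is an addition that is not part of the tutorial script, and a temporary directory stands in for the real `stored_data` folder.

```python
import os
import tempfile


def append_transcript(save_path, transcript, file_name='speech.txt'):
    """Append a transcript on its own line, creating save_path if needed."""
    # Assumption: creating the directory here is acceptable for your setup
    os.makedirs(save_path, exist_ok=True)
    complete_name = os.path.join(save_path, file_name)
    with open(complete_name, 'a+') as file_object:
        file_object.write('\n')
        file_object.write(transcript)
    return complete_name


# Use a temporary directory in place of the robot's stored_data folder
with tempfile.TemporaryDirectory() as tmp_dir:
    save_path = os.path.join(tmp_dir, 'stored_data')
    path = append_transcript(save_path, 'hello stretch')
    append_transcript(save_path, 'stow the arm')
    with open(path) as f:
        contents = f.read()

print(repr(contents))
```

Because the newline is written before each transcript, the file starts with an empty line and does not end with a trailing newline.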

+

+```python

+def main(args=None):

+    rclpy.init(args=args)

+    speech_txt = SpeechText()

+```

+

+`rclpy.init()` initializes the ROS 2 client library and must be called before any node is created. Instantiating the `SpeechText()` class then creates the node, which takes on the name 'stretch_speech_text' passed to `super().__init__()`.

+

+!!! note

+    The node name must be a base name, i.e. it cannot contain any slashes "/".

+

+```python

+rclpy.spin(speech_txt)

+```

+

+Give control to ROS with `rclpy.spin()`. This will allow the callback to be called whenever new messages come in. If we don't put this line in, then the node will not work, and ROS will not process any messages.